Department of Mathematical Sciences

850 West Dickson Street, Room 309

University of Arkansas

Fayetteville, AR 72701

P 479-575-3351

F 479-575-8630

E-mail: math@uark.edu

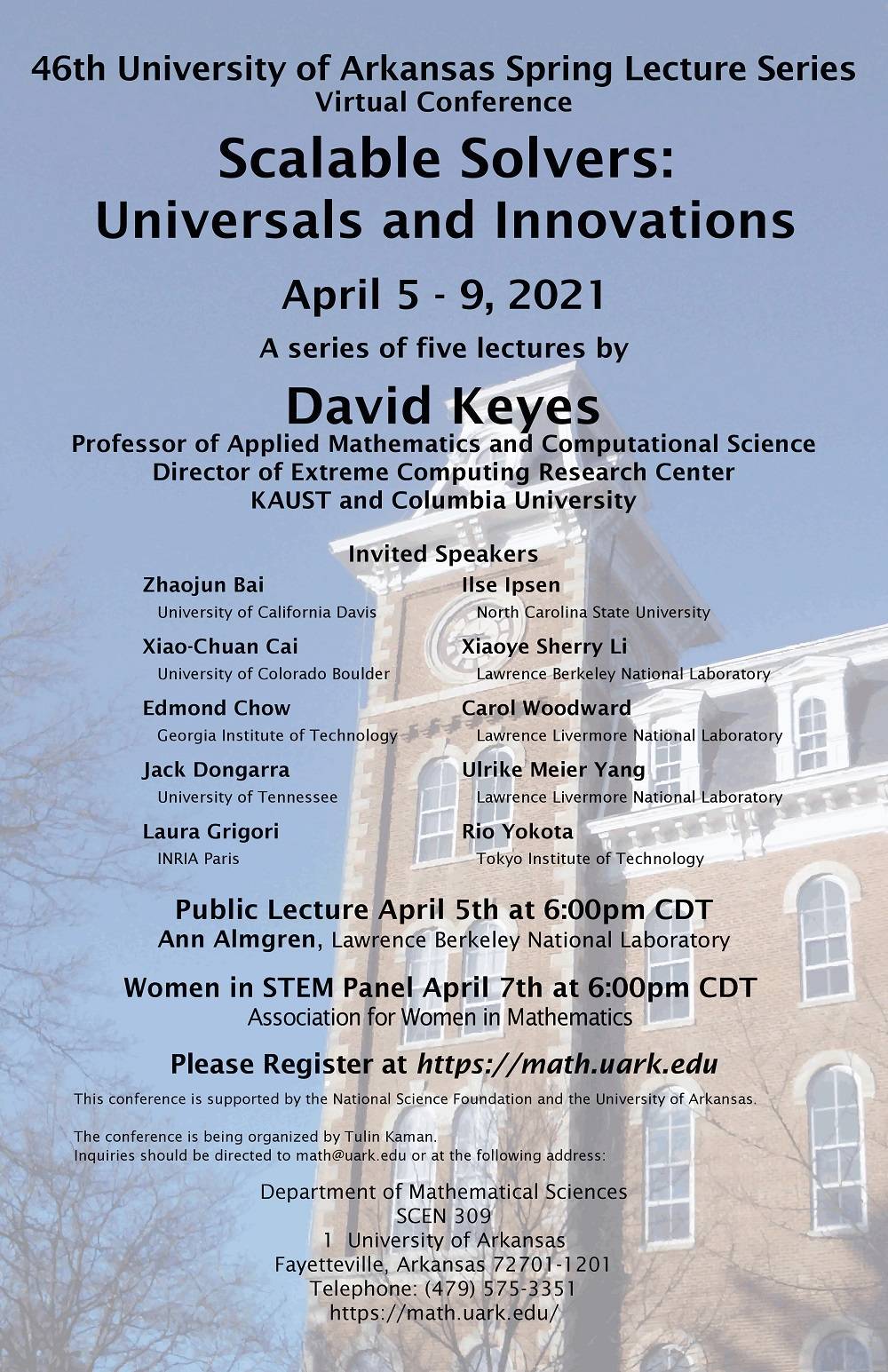

46th Annual Spring Lecture Series

Virtual Event

Scalable Solvers: Universals and Innovations

April 5 - 9, 2021 (9:00am - 12:30pm CDT, opening remarks at 8:45am CDT Monday)

Principal Speaker: David Keyes (Professor of Applied Mathematics and Computational Science; Director of the Extreme Computing Research Center; King Abdullah University of Science and Technology (KAUST) and Columbia University)

Harnessing the Power of Mathematics for High Performance Computing

Harnessing the Power of Mathematics for High Performance Computing

April 5, 2021 (6:00pm - 7:00pm CDT)

Public Lecturer: Ann Almgren (Lawrence Berkeley National Laboratory)

Women in STEM Panel

April 7th, 2021 (6:00pm - 7:00pm CDT)

Association for Women in Mathematics (AWM)

Invited Speakers

Zhaojun Bai (University of California Davis)

Xiao-Chuan Cai (University of Colorado Boulder)

Edmond Chow (Georgia Institute of Technology)

Jack Dongarra (University of Tennessee)

Laura Grigori (INRIA Paris)

Ilse Ipsen (North Carolina State University)

Xiaoye Sherry Li (Lawrence Berkeley National Laboratory)

Carol Woodward (Lawrence Livermore National Laboratory)

Ulrike Meier Yang (Lawrence Livermore National Laboratory)

Rio Yokota (Tokyo Institute of Technology)

Organizer

Tulin Kaman (tkaman@uark.edu)

Schedule of Talks

Monday, April 5th |

Tuesday, April 6th |

Wednesday, April 7th |

Thursday, April 8th |

Friday, April 9th |

| 8:45am Opening Remarks | ||||

| 9:00am David Keyes Lecture Notes [PDF] |

9:00am David Keyes Lecture Notes [PDF] |

9:00am David Keyes Lecture Notes [PDF] |

9:00am David Keyes Lecture Notes [PDF] |

9:00am David Keyes Lecture Notes [PDF] |

| 10:15am Ulrike Yang Lecture Notes [PDF] |

10:15am Laura Grigori |

10:15am Xiao-Chuan Cai Lecture Notes [PDF] |

10:15am Zhaojun Bai Lecture Notes [PDF] |

10:15am Rio Yokota |

| 11:30am Edmond Chow Lecture Notes [PDF] |

11:30am Jack Dongarra Lecture Notes [PDF] |

11:30am Carol Woodward |

11:30am Sherry Li Lecture Notes [PDF] |

11:30am Ilse Ipsen |

| 4:00 pm Graduate Student Workshop Series | ||||

| 6:00pm Ann Almgren Public Lecture |

6:00pm AWM Panel Women in STEM |

|||

Abstracts

Scalable Solvers: Universals and Innovations

Principle Lecturer: David Keyes (King Abdullah University of Science and Technology and Columbia University)

Executive Summary

As simulation and analytics enter the exascale era, numerical algorithms, particularly implicit solvers that couple vast numbers of degrees of freedom, must span a widening gap between ambitious applications and austere architectures to support them. We present fifteen universals for researchers in scalable solvers: imperatives from computer architecture that scalable solvers must respect, strategies towards achieving them that are currently well established, and additional strategies currently being developed for an effective and efficient exascale software ecosystem. We consider recent generalizations of what it means to “solve” a computational problem, which suggest that we have often been “oversolving” them at the smaller scales of the past because we could afford to do so. We present innovations that allow to approach lin-log complexity in storage and operation count in many important algorithmic kernels and thus create an opportunity for full applications with optimal scalability.

Introduction

Linear and nonlinear solvers, singular and eigen value solvers, and optimizers are core algorithms in science, engineering, finance, and data analytics and machine learning in many other fields. They are found in both inner and outer loops of such applications and may dominate the overall computational cost when compared against other computational tasks, such as discretization and adaptation, evaluation of constitutive properties, testing for solution properties or convergence, and pre- or post-processing. Each family of solvers contains numerous member implementations, from classical to contemporary, from direct to iterative, from deterministic to random. While individually easy to describe in terms of mathematical operations on data residing in an idealized fast flat unlimited memory, practical solver software depends upon parameters that are generally difficult to tune due to the hierarchical structure of memory and processor architecture. Choosing the best performing solver is generally an art informed by features of both the application and the architecture of the computer on which it is to be executed.

Scalability, for the purpose of this lecture series, is a functional relation between some “stressor”, such as discrete problem size, and some outcome, such as execution time. Many computational applications arise from the discretization of a continuous problem, e.g., a partial differential equation. A PDE with a solution of sufficient smoothness is increasingly accurately approximated with higher resolution or higher-order discretizations, which expand, respectively, either the total number of unknowns or density of the coupling between the unknowns. The mathematical structure of a discrete problem, such as the spectrum of an operator, is inherited from parameters of the underlying application, such as anisotropy, inhomogeneity, indefiniteness, nonsymmetry, or slow decay of coupling. Computational parameters to consider include the number of processing cores of different types and precisions, capacities of memory at each level of a memory hierarchy, memory latency and bandwidth, and network latency and bandwidth. Two classical scalings, known as “strong” and “weak” relate execution time to processor number and a measure of problem size. “Strong” scaling fixes the problem size and seeks a reduction in time with the addition of resources, while “weak” seeks to bound the time while solve larger problems with more resources. Scalings with respect to “bad parameters” such as coefficient contrast, wavenumber, or the order of a fractional derivative, are of increasing interest in computational science practice.

This lecture series by no means pretends to provide definitive “flowchart-quality” guidance to the combinatorially large problem of selecting algorithms for specific application-architecture combinations, nor does it serve as a comprehensive bibliography for such a large field. Its aim is to place contemporary innovations in the context of “holy grail” quests in various problem domains that are being extended to the very large scales that motivate exascale computing. The era of exascale computing has already begun in the limit of modest floating point precision.1 Given the end of Denard’s “Law” and the slowing of Moore’s “Law,” computational scientists will likely be working their way through the challenges of exascale computing for at least the entire decade of the 2020’s before arriving at the next thousand-fold performance milepost of zettascale.

Since both applications and architectures are primary drivers of algorithmic innovation, we begin by considering their current trends.

Applications are becoming ambitious in many senses: large physical space, phase space or parameter dimensions; resolution of many scales of space and/or time; high fidelity physical modeling (e.g., many components, expensive to compute or to store constitutive properties); isolation from artificial boundary conditions in PDEs; realistic levels of dilutions in particle dynamics; linking together of multiple complex models; placement of “forward problems” inside outer loops for inversion, assimilation, optimization, control, or machine learning; quantification of uncertainty; improvement of statistical estimates; and dynamic adaptation.

Architectures are austere in several senses: chiefly low memory per core, low memory bandwidth per core, and low power per operation. Low power implies using lower numerical precision where possible and many slower cores rather than fewer faster cores. It also puts a premium on building resilience into software rather than into fully reliable hardware, since the latter is usually achieved through space or time redundancy.

Algorithms must increasingly adapt to span the gap between applications that are increasingly ambitious and architectures that are increasingly austere in terms resources on a per-flop/s basis, especially in ways that lead to less uniformity and less predetermined schedulability of operations. Billions of dollars’ worth of open-source scientific software worldwide predicated upon single-program multiple data (SPMD) message-passing programming, representing more than 25 years of successful performance advances, hangs in the balance. Without algorithmic adaption, exascale computers will be limited to petascale performance.

Our “principal lectures” explore 15 universals in scalable solvers:

We begin by understanding five architectural imperatives:

- Reside “high” on the memory hierarchy, as close as possible to the processing elements

- Rely on SIMD/SIMT-style batches of tasks at fine scale

- Reduce synchrony in frequency and/or span

- Reduce communication in number and/or volume of messages

- Exploit heterogeneity in processing, memory, and networking elements

There are five trade-offs between computation, communication, and memory that are already well established, motivated by these imperatives:

- Exploit extra memory to reduce communication volume

- Perform extra flops to require fewer global operations

- Use high-order discretizations to manipulate fewer DOFs (w/more ops per DOF)

- Adapt floating point precision to output accuracy requirements

- Take more resilience into algorithm space, out of hardware/systems space

Then there are five less well-established strategies that are important going forward. These are the primary drivers behind the algorithms we investigate in this lecture series:

- Employ dynamic scheduling capabilities, e.g., dynamic runtime systems based DAGs

- Code to specialized “back-ends” while presenting high-level APIs to general users

- Exploit data sparsity to meet “curse of dimensionality” with “blessing of low rank”

- Process “on the fly” rather than storing all at once (esp. large dense matrices)

- Co-design algorithms with hardware, incl. computing in the network or in memory

The opening principal lecture reviews these fifteen universals with brief background and examples.

The four remaining principal lectures and the guest lectures examine core scientific solvers from the perspective of these universals, illustrating one or several of them in combination in algorithms common in real-world computations. The algorithmic families considered include:

- Direct Methods for Linear Systems

- Iterative Methods for Linear Systems

- Hierarchically Low-rank Linear Algebra

- Eigenvalue and Singular Value Decomposition

- Newton-like methods for Nonlinear Systems

- Nonlinear Preconditioning

- Examples of practical extreme-scale computational problems illustrating such solvers from PDEs, PDE-constrained optimization and spatial statistics

Monday, April 5, 2021

|

8:45am |

Opening Remarks |

|

9:00am |

David Keyes |

|

10:15am |

Ulrike Yang |

|

11:30am |

Edmond Chow |

|

4:00pm |

Graduate Student Workshop Series |

|

6:00pm |

Ann Almgren |

The Impact of Computer Architectures on the Design of Algebraic Multigrid Methods

Ulrike Yang (Lawrence Livermore National Laboratory)

Algebraic multigrid (AMG) is a popular iterative solver and preconditioner for large sparse linear systems. When designed well, it is algorithmically scalable, enabling it to solve increasingly larger systems efficiently. While it consists of various highly parallel building blocks, the original method also consisted of various highly sequential components. A large amount of research has been performed over several decades to design new components that perform well on high performance computers. As a matter of fact, AMG has shown to scale well to more than a million processes. However, with single-core speeds plateauing, future increases in computing performance need to rely on more complicated, often heterogenous computer architectures, which provide new challenges for efficient implementations of AMG. To meet these challenges and yield fast and efficient performance, solvers need to exhibit extreme levels of parallelism, and minimize data movement. In this talk, we will give an overview on how AMG has been impacted by the various architectures of high-performance computers to date and discuss our current efforts to continue to achieve good performance on emerging computer architectures.

Preconditioned Iterative Methods for Linear Systems

Edmond Chow (Georgia Institute of Technology)

Iterative methods for the solution of linear systems of equations – such as stationery, semi-iterative, and Krylov subspace methods – are classical methods taught in numerical analysis courses, but adapting these methods to run efficiently at large-scale on high-performance computers is challenging and a constantly evolving topic. Preconditioners – necessary to aid the convergence of iterative methods – come in many forms, from algebraic to physics-based, are regularly being developed for linear systems from different classes of problems, and similarly are evolving with high-performance computers. This lecture will cover the background and some recent developments on iterative methods and preconditioning in the context of high-performance parallel computers. Topics include asynchronous iterative methods that avoid the potentially high synchronization cost where there are very large numbers of computational threads, parallel sparse approximate inverse preconditioners, parallel incomplete factorization preconditioners and sparse triangular solvers, and preconditioning with hierarchical rank-structured matrices for kernel matrix equations.

Harnessing the Power of Mathematics for High Performance Computing

Public Lecturer: Ann Almgren (Lawrence Livermore National Lab)

Mathematics has always played a critical role in scientific discovery through computing. The increasing power and heterogeneity of modern computer architectures provide the opportunity to understand physical systems with higher and higher fidelity. But as the range of length and time scales we want to probe becomes ever greater and the number of different types of physical processes we need to model becomes larger and more varied, the challenge of building effective simulation methodologies grows along with the opportunities. Mathematics provides the key to meeting this challenge. It allows us to decompose complex problems into their core elements and express how these elements are coupled. It allows us to abstract physical processes into equations and design algorithms to solve them. In addition, casting problems in the language of mathematics exposes the commonality between diverse applications. Exploiting this commonality enables us to capture complex algorithms in efficient high-performance software that provides building blocks for new algorithmic exploration and scientific discovery.

Tuesday, April 6, 2021

|

9:00am |

David Keyes |

|

10:15am |

Laura Grigori |

|

11:30am |

Jack Dongarra |

|

4:00pm |

Graduate Student Workshop Series |

Recent Advances in Solving Large Systems of Linear Equations

Laura Grigori (Institut National de Recherche en Informatique et an Automatique (INRIA))

This talk will focus on recent advances in solving large linear systems of equations by using multilevel domain decomposition techniques and randomization. We describe a multilevel technique based on Geneo that allows to bound the condition number of the preconditioned matrix and thus leads to a fast and robust convergence of an iterative method. We then discuss randomization techniques for solving linear systems of equations by using GMRES. These techniques are based on parallel sketching and a randomized Gram-Schmidt orthogonalization process.

This is joint work with H. Al Daas, O. Balabanov, M. Beaupère, P. Jolivet, P.-H. Tournier.

The Road to Exascale and Legacy Software for Dense Linear Algebra

Jack Dongarra (University of Tennessee, Oak Ridge National Laboratory, and University of Manchester)

In this talk, we will look at the current state of high performance computing and look at the next stage of extreme computing. With extreme computing, there will be fundamental changes in the character of floating point arithmetic and data movement. In this talk, we will look at how extreme-scale computing has caused algorithm and software developers to change their way of thinking on implementing and program-specific applications.

Wednesday, April 7, 2021

|

9:00am |

David Keyes |

|

10:15am |

Xiao-Chuan Cai |

|

11:30am |

Carol Woodward |

|

4:00pm |

Graduate Student Workshop Series |

|

6:00pm |

Women in STEM Panel |

Nonlinear Preconditioning Methods and Applications

Xiao-Chuan Cai (University of Macau and University of Colorado-Boulder)

We consider solving system of nonlinear algebraic equations arising from the discretization of partial differential equations. Inexact Newton is a popular technique for such problems. When the nonlinearities in the system are well-balanced, Newton's method works well, but when a small number of nonlinear functions in the system are much more nonlinear than the others, Newton may converge slowly or even stagnate. In such a situation, we introduce some nonlinear preconditioners to balance the nonlinearities in the system. The preconditioners are often constructed using a combination of some domain decomposition methods and nonlinear elimination methods. For the nonlinearly preconditioned problem, we show that fast convergence can be restored. In this talk we first review the basic algorithms, and then discuss some recent progress in the applications of nonlinear preconditioners for some difficult problems arising in computational mechanics including both fluid dynamics and solid mechanics.

Recent Advances in Time Integration Methods and How They Can Enable Exascale Simulations

Carol Woodward (Lawrence Livermore National Laboratory)

To prepare for exascale systems, scientific simulations are growing in physical realism and thus complexity. This increase often results in additional and changing time scales. Time integration methods are critical to efficient solution of these multiphysics systems. Yet, many large-scale applications have not fully embraced modern time integration methods nor efficient software implementations. Hence, achieving temporal accuracy with new and complex simulations has proved challenging. We will overview recent advances in time integration methods, including additive IMEX methods, multirate methods, and parallel-in-time approaches, expected to help realize the potential of exascale systems on multiphysics simulations. Efficient execution of these methods relies, in turn, on efficient algebraic solvers, and we will discuss the relationships between integrators and solvers. In addition, an effective time integration approach is not complete without efficient software, and we will discuss effective software design approaches for time integrators and their uses in application codes. Lastly, examples demonstrating some of these new methods and their implementations will be presented.

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. LLNL-ABS- 819501.

Thursday, April 8, 2021

|

9:00am |

David Keyes |

|

10:15am |

Zhaojun Bai |

|

11:30am |

Sherry Li |

|

4:00pm |

Graduate Student Workshop Series |

Thick-Restart Lanczos with Explicit External Deflation for Computing Many Eigenpairs and its Communication-Avoiding Variant

Zhaojun Bai (University of California-Davis)

There are continual and compelling needs for computing many eigenpairs of very large Hermitian matrix in physical simulations and data analysis. Though the Lanczos method is effective for computing a few eigenvalues, it can be expensive for computing a large number of eigenvalues. To improve the performance of the Lanczos method, in this talk, we will present a combination of explicit external deflation (EED) with an s-step variant of thick-restart Lanczos (s-step TRLan). The s-step Lanczos method can achieve an order of s reduction in data movement while the EED enables to compute eigenpairs in batches along with a number of other advantages.

A low-rank factorization framework for building scalable algebraic solvers and preconditioners

Sherry Li (Lawrence Berkeley National Laboratory)

Factorization based preconditioning algorithms, most notably incomplete LU (ILU) factorization,

have been shown to be robust and applicable to wide ranges of problems. However, traditional

ILU algorithms are not amenable to scalable implementation. In recent years, we have

seen a lot of investigations using low-rank compression techniques to build approximate

factorizations.

A key to achieving lower complexity is the use of hierarchical matrix algebra, stemming

from the H-matrix research. In addition, the multilevel algorithm paradigm provides

a good vehicle for a scalable implementation. The goal of this lecture is to give

an overview of the various hierarchical matrix formats, such as hierarchically semi-separable

matrix (HSS), hierarchically off-diagonal low-rank matrix (HODLR) and Butterfly matrix,

and explain the algorithm differences and approximation quality. We will illustrate

many practical issues of these algorithms using our parallel libraries STRUMPACK and

ButterflyPACK, and demonstrate their effectiveness and scalability while solving the

very challenging problems, such as high frequency wave equations.

Friday, April 9, 2021

|

9:00am |

David Keyes |

|

10:15am |

Rio Yokota |

|

11:30am |

Ilse Ipsen |

|

4:00pm |

Graduate Student Workshop Series |

Hierarchically Low Rank and Kroenecker Methods

Rio Yokota (Tokyo Institute of Technology)

Exploiting structures of matrices goes beyond identifying their non-zero patterns. In many cases, dense full-rank matrices have low-rank submatrices that can be exploited to construct fast approximate algorithms. In other cases, dense matrices can be decomposed into Kronecker factors that are much smaller than the original matrix. Sparsity is a consequence of the connectivity of the underlying geometry (mesh, graph, interaction list, etc.), whereas the rank-deficiency of submatrices is closely related to the distance within this underlying geometry. For high dimensional geometry encountered in data science applications, the curse of dimensionality poses a challenge for rank-structured approaches. On the other hand, models in data science that are formulated as a composition of functions, lead to a Kronecker product structure that yields a different kind of fast algorithm. In this lecture, we will look at some examples of when rank structure and Kronecker structure can be useful.

Randomized Algorithms for Least Squares Problems

Ilse Ipsen (North Carolina State University)

The emergence of massive data sets, over the past twenty or so years, has lead to the development of Randomized Numerical Linear Algebra. Randomized matrix algorithms perform random sketching and sampling of rows or columns, in order to reduce the problem dimension or compute low-rank approximations. We review randomized algorithms for the solution of least squares/regression problems, based on row sketching from the left, or column sketching from the right. These algorithms tend to be efficient and accurate on matrices that have many more rows than columns. We present probabilistic bounds for the amount of sampling required to achieve a user-specified error tolerance. Along the way we illustrate important concepts from numerical analysis (conditioning and pre-conditioning), probability (coherence, concentration inequalities), and statistics (sampling and leverage scores). Numerical experiments illustrate that the bounds are informative even for small problem dimensions and stringent success probabilities. To stress-test the bounds, we present algorithms that generate 'adversarial' matrices' for user-specified coherence and leverage scores. If time permits, we discuss the additional effect of uncertainties from the underlying Gaussian linear model in a regression problem.

Ann Almgren

Ann Almgren Ilse Ispen

Ilse Ispen Xiaoye Sherry Li

Xiaoye Sherry Li Carol Woodward

Carol Woodward Ulrike Meier Yang

Ulrike Meier Yang David Keyes directs the Extreme Computing Research Center at the King Abdullah University of

Science and Technology (KAUST), where he was the founding Dean of the Division of

Mathematical and Computer Sciences and Engineering in 2009 and currently serves in

the Office of the President as Senior Associate for strategic priorities and institutional

partnerships. He is also an Adjunct Professor in Applied Mathematics at Columbia University,

where he formerly held the Fu Foundation Chair. He works at the interface between

parallel computing and partial differential equations and statistics, with a current

focus on scalable algorithms that exploit data sparsity. Before joining KAUST, Keyes

led multi-institutional scalable solver software projects in the SciDAC and ASCI programs

of the US Department of Energy (DoE), ran university collaboration programs at US

DoE and NASA institutes, and taught at Columbia, Old Dominion, and Yale Universities. He

is a Fellow of SIAM, AMS, and AAAS, and has been awarded the ACM Gordon Bell Prize,

the IEEE Sidney Fernbach Award, and the SIAM Prize for Distinguished Service to the

Profession. He earned a B.S.E. in Aerospace and Mechanical Sciences from Princeton

in 1978 and a Ph.D. in Applied Mathematics from Harvard in 1984.

David Keyes directs the Extreme Computing Research Center at the King Abdullah University of

Science and Technology (KAUST), where he was the founding Dean of the Division of

Mathematical and Computer Sciences and Engineering in 2009 and currently serves in

the Office of the President as Senior Associate for strategic priorities and institutional

partnerships. He is also an Adjunct Professor in Applied Mathematics at Columbia University,

where he formerly held the Fu Foundation Chair. He works at the interface between

parallel computing and partial differential equations and statistics, with a current

focus on scalable algorithms that exploit data sparsity. Before joining KAUST, Keyes

led multi-institutional scalable solver software projects in the SciDAC and ASCI programs

of the US Department of Energy (DoE), ran university collaboration programs at US

DoE and NASA institutes, and taught at Columbia, Old Dominion, and Yale Universities. He

is a Fellow of SIAM, AMS, and AAAS, and has been awarded the ACM Gordon Bell Prize,

the IEEE Sidney Fernbach Award, and the SIAM Prize for Distinguished Service to the

Profession. He earned a B.S.E. in Aerospace and Mechanical Sciences from Princeton

in 1978 and a Ph.D. in Applied Mathematics from Harvard in 1984.

Edmond Chow is an Associate Professor in the School of Computational Science and Engineering

at Georgia Institute of Technology. He previously held positions at D. E. Shaw Research

and Lawrence Livermore National Laboratory. His research is in developing and applying

numerical methods and high-performance computing to solve large-scale scientific computing

problems and seeks to enable scientists and engineers to solve larger problems more

efficiently using physical simulation. Specific interests include numerical linear

algebra (preconditioning, multilevel methods, sparse matrix computations, rank-structured

matrices) and parallel methods for quantum chemistry, molecular dynamics, and Brownian/Stokesian

dynamics. Dr. Chow earned an Honors B.A.Sc. in Systems Design Engineering from the

University of Waterloo, Canada, in 1993, and a Ph.D. in Computer Science with a minor

in Aerospace Engineering from the University of Minnesota in 1997. Dr. Chow was awarded

the 2009 ACM Gordon Bell prize and the 2002 Presidential Early Career Award for Scientists

and Engineers (PECASE).

Edmond Chow is an Associate Professor in the School of Computational Science and Engineering

at Georgia Institute of Technology. He previously held positions at D. E. Shaw Research

and Lawrence Livermore National Laboratory. His research is in developing and applying

numerical methods and high-performance computing to solve large-scale scientific computing

problems and seeks to enable scientists and engineers to solve larger problems more

efficiently using physical simulation. Specific interests include numerical linear

algebra (preconditioning, multilevel methods, sparse matrix computations, rank-structured

matrices) and parallel methods for quantum chemistry, molecular dynamics, and Brownian/Stokesian

dynamics. Dr. Chow earned an Honors B.A.Sc. in Systems Design Engineering from the

University of Waterloo, Canada, in 1993, and a Ph.D. in Computer Science with a minor

in Aerospace Engineering from the University of Minnesota in 1997. Dr. Chow was awarded

the 2009 ACM Gordon Bell prize and the 2002 Presidential Early Career Award for Scientists

and Engineers (PECASE).

Laura Grigori is a senior research scientist at INRIA in France, where she is leading Alpines group,

a joint group between INRIA and J.L. Lions Laboratory, Sorbonne University, in Paris.

She is vice-chair of PRACE Scientific Steering Committee, a member of SIAM Council

since January 2018, the recipient of an ERC Synergy Grant, and a SIAM Fellow. Her

field of expertise is in numerical linear and multilinear algebra, and high performance

scientific computing. She co-authored in 2008 papers introducing communication-avoiding

algorithms that provably minimize communication, for which she was awarded with her

co-authors the SIAM SIAG on Supercomputing Best Paper Prize 2016 for the most outstanding

paper published in 2012-2015 in a journal in the field of high performance computing.

She served as the Program Director of the SIAM Activity Group on Supercomputing, January

2014 - December 2015, then the Chair of this interest group, January 2016 - December

2017.

Laura Grigori is a senior research scientist at INRIA in France, where she is leading Alpines group,

a joint group between INRIA and J.L. Lions Laboratory, Sorbonne University, in Paris.

She is vice-chair of PRACE Scientific Steering Committee, a member of SIAM Council

since January 2018, the recipient of an ERC Synergy Grant, and a SIAM Fellow. Her

field of expertise is in numerical linear and multilinear algebra, and high performance

scientific computing. She co-authored in 2008 papers introducing communication-avoiding

algorithms that provably minimize communication, for which she was awarded with her

co-authors the SIAM SIAG on Supercomputing Best Paper Prize 2016 for the most outstanding

paper published in 2012-2015 in a journal in the field of high performance computing.

She served as the Program Director of the SIAM Activity Group on Supercomputing, January

2014 - December 2015, then the Chair of this interest group, January 2016 - December

2017.

X.-C. Cai's research interests are in the general area of scientific and engineering computing

including parallel algorithms and high performance software for linear and nonlinear

partial differential equations, domain decomposition methods, multigrid methods, numerical

linear algebra, PDE-constrained optimization, inverse problems, stochastic partial

differential equations, computational fluid dynamics, fluid-structure interactions,

computational biomechanics, and parallel processing. He received his BSc degree in

1984 from Peking University, and his MSc in 1988 and PhD in 1989 from the Courant

Institute, New York University. He was a postdoc during 1989-1990 in the Department

of Computer Science, Yale University. During 1990-1993, he held the position of an

Assistant Professor in the Department of Mathematics, University of Kentucky. Since

1993, he has been a faculty member in the Department of Computer Science, University

of Colorado Boulder, and the positions he held include Assistant Professor (1993-1997),

Associate Professor (1997-2004), Professor (2004-present), Director of Graduate Studies

(2002-2007) and Department Chair (2007-2011). He is also an affiliated faculty member

in the Department of Applied Mathematics, University of Colorado Boulder (1993-present).

He is a SIAM Fellow.

X.-C. Cai's research interests are in the general area of scientific and engineering computing

including parallel algorithms and high performance software for linear and nonlinear

partial differential equations, domain decomposition methods, multigrid methods, numerical

linear algebra, PDE-constrained optimization, inverse problems, stochastic partial

differential equations, computational fluid dynamics, fluid-structure interactions,

computational biomechanics, and parallel processing. He received his BSc degree in

1984 from Peking University, and his MSc in 1988 and PhD in 1989 from the Courant

Institute, New York University. He was a postdoc during 1989-1990 in the Department

of Computer Science, Yale University. During 1990-1993, he held the position of an

Assistant Professor in the Department of Mathematics, University of Kentucky. Since

1993, he has been a faculty member in the Department of Computer Science, University

of Colorado Boulder, and the positions he held include Assistant Professor (1993-1997),

Associate Professor (1997-2004), Professor (2004-present), Director of Graduate Studies

(2002-2007) and Department Chair (2007-2011). He is also an affiliated faculty member

in the Department of Applied Mathematics, University of Colorado Boulder (1993-present).

He is a SIAM Fellow.

Zhaojun Bai is a Distinguished Professor in the Department of Computer Science and Department

of Mathematics, University of California, Davis, and a Faculty Computer Scientist

at Lawrence Berkeley National Laboratory. He obtained his PhD from Fudan University,

China and had a postdoctoral fellowship at the Courant Institute, New York University.

His main research interests include linear algebra algorithm design and analysis,

mathematical software engineering and applications in computational science and engineering,

and data science. He participated in a number of synergistic projects, such as LAPACK.

He is a co-Editor-in-Chief of ACM TOMS, and serves on editorial boards of JCM and

Science China Mathematics among others. Previously, he served as an associate editor

of SIMAX, vice chair of IEEE IPDPS, and numerous other professional positions. He

is a Fellow of SIAM.

Zhaojun Bai is a Distinguished Professor in the Department of Computer Science and Department

of Mathematics, University of California, Davis, and a Faculty Computer Scientist

at Lawrence Berkeley National Laboratory. He obtained his PhD from Fudan University,

China and had a postdoctoral fellowship at the Courant Institute, New York University.

His main research interests include linear algebra algorithm design and analysis,

mathematical software engineering and applications in computational science and engineering,

and data science. He participated in a number of synergistic projects, such as LAPACK.

He is a co-Editor-in-Chief of ACM TOMS, and serves on editorial boards of JCM and

Science China Mathematics among others. Previously, he served as an associate editor

of SIMAX, vice chair of IEEE IPDPS, and numerous other professional positions. He

is a Fellow of SIAM.

Rio Yokota is an Associate Professor at the Global Scientific Information and Computing Center

at the Tokyo Institute of Technology. His research interests lie at the intersection

of high performance computing, linear algebra, and machine learning. He is the developer

numerous libraries for fast multipole methods (ExaFMM), hierarchical low-rank algorithms

(HICMA), and Kronecker-factored approximation (K-FAC) that scale to the full system

on the largest supercomputers today. He has been optimizing these algorithms on GPUs

since 2006, and was part of a team that received the Gordon Bell prize in 2009 using

the first GPU supercomputer.

Rio Yokota is an Associate Professor at the Global Scientific Information and Computing Center

at the Tokyo Institute of Technology. His research interests lie at the intersection

of high performance computing, linear algebra, and machine learning. He is the developer

numerous libraries for fast multipole methods (ExaFMM), hierarchical low-rank algorithms

(HICMA), and Kronecker-factored approximation (K-FAC) that scale to the full system

on the largest supercomputers today. He has been optimizing these algorithms on GPUs

since 2006, and was part of a team that received the Gordon Bell prize in 2009 using

the first GPU supercomputer.

Ilse Ipsen received a Bachelor's degree from the University of Kaiserslautern in Germany and

a Ph.D. from Penn State, both in Computer Science. She taught Computer Science at

Yale for 10 years, and is now a Professor of Mathematics at NCState, with affiliate

appointments in Statistics and the Institute for Advanced Analytics. Her research

interests include numerical linear algebra, randomized algorithms, and probabilistic

numerics; and she has authored a graduate text book Numerical Matrix Analysis. Ilse Ipsen is the founding Editor-in-Chief of the SIAM Book Series on Data Science

and serves on the editorial boards of SIAM Review, Numerische Mathematik, and Acta

Numerica. She has been SIAM Vice-President for Programs, SIAM Vice-President at Large,

and Associate Director of SAMSI. She is a Fellow of the AAAS and SIAM.

Ilse Ipsen received a Bachelor's degree from the University of Kaiserslautern in Germany and

a Ph.D. from Penn State, both in Computer Science. She taught Computer Science at

Yale for 10 years, and is now a Professor of Mathematics at NCState, with affiliate

appointments in Statistics and the Institute for Advanced Analytics. Her research

interests include numerical linear algebra, randomized algorithms, and probabilistic

numerics; and she has authored a graduate text book Numerical Matrix Analysis. Ilse Ipsen is the founding Editor-in-Chief of the SIAM Book Series on Data Science

and serves on the editorial boards of SIAM Review, Numerische Mathematik, and Acta

Numerica. She has been SIAM Vice-President for Programs, SIAM Vice-President at Large,

and Associate Director of SAMSI. She is a Fellow of the AAAS and SIAM.