Department of Mathematical Sciences

850 West Dickson Street, Room 309

University of Arkansas

Fayetteville, AR 72701

P 479-575-3351

F 479-575-8630

E-mail: math@uark.edu

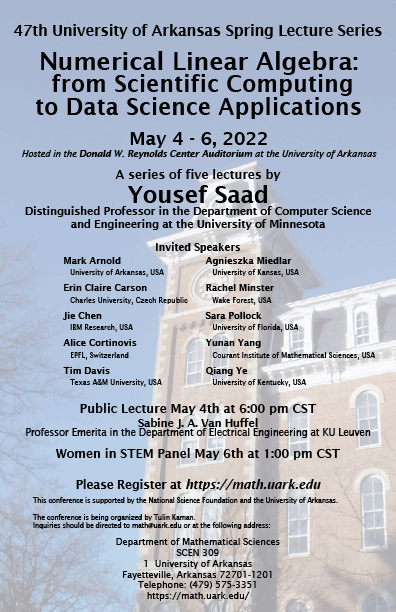

47th Spring Lecture Series

Hybrid Conference: 47th Annual Spring Lecture Series

Numerical Linear Algebra: from Scientific Computing to Data Science Applications

May 4 - 6, 2022 (7:30 am - 6:00 pm CST)

Principal Speaker: Yousef Saad

Distinguished Professor in the Department of Computer Science and Engineering at the University of Minnesota Public Lecture | Challenges in Smart Patient Monitoring: from Raw Data to Decision

Support

Public Lecture | Challenges in Smart Patient Monitoring: from Raw Data to Decision

Support

May 4, 2022 (6:00 pm CST)

Public Lecturer: Sabine Van Huffel

Professor Emerita in the Department of Electrical Engineering at KU LeuvenWomen in STEM Panel

May 6, 2022 (1:00pm CST)

Association for Women in Mathematics (AWM)

Invited Speakers

Mark Arnold (University of Arkansas, USA)

Erin Claire Carson (Charles University, Czech Republic)

Jie Chen (IBM Research, USA)

Alice Cortinovis (EPFL, Switzerland)

Tim Davis (Texas A&M University, USA)

Agnieszka Międlar (University of Kansas, USA)

Rachel Minster (Wake Forest, USA)

James Nagy (Emory University, USA)

Sara Pollock (University of Florida, USA)

Qiang Ye (University of Kentucky, USA)

Organizer

Tulin Kaman (tkaman@uark.edu)

Assistant Professor, Lawrence Jesser Toll Jr. Endowed Chair, Department of Mathematical Sciences, University of ArkansasSchedule of Talks, Video Lectures, and Slides

Conference Location will be in the Donald W. Reynolds Center Auditorium

Wednesday, May 4th |

Thursday, May 5th

|

Friday, May 6th

|

| 7:15am Registration - Coffee/Tea | 7:15am Coffee/Tea | |

| 7:45am Opening Remarks | ||

| 8:00am Yousef Saad [Video] [Slides] Lecture #1 |

8:00am Yousef Saad [Video] [Slides] Lecture #3 |

8:00am Yousef Saad [Video] [Slides] Lecture #5 |

| Break | ||

| 9:30am Tim Davis [Video] [Slides] | 9:30am Sara Pollock [Video] [Slides] | 9:30am Rachel Minster [Video] [Slides] |

| Break | ||

| 10:45am Erin Claire Carson [Video] [Slides] | 10:45am Agnieszka Międlar [Video] [Slides] | 10:45am Mark Arnold [Video] [Slides] |

| Lunch | ||

| 1:00pm Yousef Saad [Video] [Slides] Lecture #2 |

1:00pm Yousef Saad [Video] [Slides] Lecture #4 |

1:00pm Women in STEM Panel |

| Break | ||

| 2:30pm James Nagy [Video] [Slides] | 2:30pm Jie Chen [Video] [Slides] | 2:15pm Contributed Talks Session |

| Break | 3:45pm Closing Remarks | |

| 3:45pm Alice Cortinovis [Video] [Slides] | 3:45pm Qiang Ye [Video] |

|

| 4:45pm Poster Session w/ Reception | 4:45pm Boarding Shuttle from Reynolds Center to Crystal Bridges |

|

| 6:00pm Sabine Van Huffel [Video] [Slides] Public Lecture |

6:00pm Banquet @ Crystal Bridges |

|

Abstracts

Numerical Linear Algebra: from Scientific Computing to Data Science Applications

Principle Lecturer: Yousef Saad (Distinguished Professor in the Department of Computer Science and Engineering at the University of Minnesota)

Abstract

Numerical linear algebra is at the core of virtually every field of science and engineering, whether in solving linear systems that arise from simulations of physical phenomena, or in obtaining various solutions of optimization problems in data related applications. As the world around us is progressively being analyzed or modeled with the help of available data, the types of computational problems encountered are changing, and as a result the field is currently undergoing a deep transformation. This set of 5 lectures presents an overview of the methodologies that are used in both the scientific computing and the data science disciplines. In an effort to build a bridge between these two disciplines, one of the goals of the lectures is to introduce data science techniques to nonspecialists in scientific computing.

Summary of Lectures

Numerical linear algebra is at the core of virtually every field of science and engineering, whether in solving linear systems that arise from simulations of physical phenomena, or in obtaining various solutions of optimization problems in data related applications. As the world around is progressively being analyzed or modeled with the help of available data, the types of computational problems encountered are changing, and as a result the field is currently undergoing a deep transformation. For the specialist in numerical methods this means that existing methods must be adapted to the new demands and that new ones must also be developed to cope with the emerging paradigm.

This set of 5 lectures will present the fundamental ideas of numerical linear algebra with an emphasis on those methods that have the potential to play an important role in data science, e.g., eigenvalue and singular value problems, sparse matrix techniques, graph based methods, Krylov subspaces, and preconditioning. Part of the lectures will be devoted to specific problems of data sciences, covering applications of graph-based methods, randomization methods, Network analysis, dimension reduction, and neural networks among other themes. The lectures will frequently be illustrated by live Matlab (or Python) demonstrations.

Lecture #1

The first lecture will begin with a brief historical perspective in an attempt to explain what drives innovation in matrix computations. A major problem in the era of Gauss (early 1800s), was to solve linear systems related to the normal equations for the purpose of calculating orbits of celestial objects. The first part of the 1900s saw an increased interest in solving discretized partial differential equations for dealing with various simulations, e.g., weather forecasting. Later in 1950s at the height of the cold war, the eigenvalue problem generated a wave of interest in the linear algebra community, as it was key to solving the 'flutter' problem in aircrafts. There is no doubt that the emergence of data related applications and machine learning in particular, is ushering in a new era, initiating a turning point of similar magnitude to those turns taken in the past. This first lecture we will also provide a synopsis of problems encountered in matrix computations, ranging from the ones seen in optimization and control to those related to solving PDEs. Finally, the lecture will cover some background in matrix theory and in sparse matrix techniques.

Lecture #2

The second lecture will present projection methods and Krylov subspace techniques. Here, it is important to take a relatively formal view because the idea of projection method is rather powerful as well as widespread. Thus, the Galerkin approach or the Rayleigh-Ritz projection technique can be applied in finite as well as infinite dimensional spaces and can be applied to spaces of polynomials, or vectors, or classes of functions. Among these, Krylov subspace methods occupy a distinct place in numerical linear algebra. They will be described for linear systems as well as for eigenvalue problems, and briefly for nonlinear systems of equations.

Lecture #3

The third lecture will cover sparse matrix techniques and graphs. A sparse matrix is a matrix whose entries are mostly zeros. Such matrices play a major role in applications ranging from discretized partial differential equations to the analysis of text data. This lecture will introduce sparse matrices, discuss how they are stored, and their relations with graphs. Graph representations play a key role in understanding certain types of algorithms for sparse matrices. In particular we will see how graphs can be used to represent data by means of nearest-neighbor techniques or via kernels. This lecture will also discuss sparse direct methods for solving linear systems, Preconditioning techniques, and Domain Decomposition ideas.

Lecture #4

The fourth lecture will continue on with graphs and discuss methods for graph and network analysis. We will describe the intimate relation between graphs and data, and the Graph representation of data, and similarity graphs. Graph Laplaceans play a major role in various applications, so we give an overview of their properties and then see how they have been used in applications such as clustering and various measures of network analysis. We will also discuss graph partitioning and coarsening as these are rather common in scientific computing. A key ingredient utilized in both scientific computing and in data science is that of graph coarsening. Given a large graph, the goal of graph coarsening is to find a graph of much smaller size that is a faithful representative of the original graph. When analyzing networks, various measures of 'communicability' are used. We will take a particular look at the Estrada index. This particular topic generated a big interest in the problem of estimating bilinear forms. We will also provide an overview of numerical linear algebra and Krylov subspace methods for Network analysis.

Lecture #5

Lecture 5 will take us to 'dimension reduction methods', an area that straddles numerical linear algebra, machine learning, and statistical analysis. An important application of dimension reduction is that of graph embeddings which consists of representing graphs with vectors. This lecture will also discuss projection methods from the angle of dimension reduction. It will review randomization and sketching and graph based methods. Other topics to be covered include: Linear Discriminant Analysis, Support Vector Machines, and applications such as: Segmentation; Face recognition; digit recognition. Finally we will give an overview of Neural Networks: Basics of neural networks; Deep learning; Convolutional Neural Networks; The problem of back propagation; Graph Neural Networks and the use of embeddings. Numerical methods: Stochastic gradient descent.

Wednesday, May 4, 2022

|

8:00am

|

Yousef Saad #1

|

|

9:30am

|

Tim Davis

|

|

10:45am

|

Erin Claire Carson

|

|

1:00pm

|

Yousef Saad #2

|

|

2:30pm

|

James Nagy

|

|

3:45pm

|

Alice Cortinovis

|

|

4:45pm

|

Poster Session

|

|

6:00pm

|

Public Lecture

|

SuiteSparse:GraphBLAS: Graph Algorithms in the Language of Sparse Linear Algebra

Tim Davis (Texas A&M University, USA)

SuiteSparse:GraphBLAS is a full implementation of the GraphBLAS standard, which defines a set of sparse matrix operations on an extended algebra of semirings using an almost unlimited variety of operators and types. When applied to sparse adjacency matrices, these algebraic operations are equivalent to computations on graphs. GraphBLAS provides a powerful and expressive framework for creating graph algorithms based on the elegant mathematics of sparse matrix operations on a semiring. Key features and performance of the SuiteSparse implementation of GraphBLAS package are described. The implementation appears in Linux distros, forms the basis of the RedisGraph module of Redis (a commercial graph database system), and appears as C=A*B in MATLAB. Graph algorithms written in GraphBLAS can rival the performance of highly-tuned specialized kernels, while being far simpler for the end user to write.

Exploiting Mixed Precision in Numerical Linear Algebra

Erin Claire Carson (Charles University, Czech Republic)

Support for floating point arithmetic in multiple precisions is becoming increasingly common in emerging architectures. Mixed precision capabilities are already included in many machines on the TOP500 list and are expected to be a crucial hardware feature in coming exascale machines. From a computational scientist's perspective, our goal is to determine how and where we can exploit mixed precision computation in our codes. This requires both an understanding of performance characteristics as well as an understanding of the numerical behavior of algorithms in finite precision arithmetic. After introducing floating point computation, mixed precision hardware, and current work in mixed precision numerical linear algebra, we present examples that demonstrate what can go wrong if we use low precision blindly. This motivates the need for rigorous rounding error analysis in algorithms used in scientific computing and data science applications. Understanding the behavior of algorithms in finite precision is necessary not only for illuminating potential dangers, but also for revealing opportunities. As an example of where rounding error analysis can lead to new insights and improved algorithms, we present a general algorithm for solving linear systems based on mixed-precision iterative refinement. From this, we develop a mixed-precision GMRES-based iterative refinement scheme that works for even ill-conditioned systems. We discuss performance results on modern GPU architectures and the HPL-AI benchmark, which is based on these mixed precision iterative refinement algorithms. The world's top supercomputers already exceed exaflop performance on HPL-AI, achieving over 4x higher performance than on the standard HPL benchmark.

Flexible Krylov Subspace Regularization for Inverse Problems

James Nagy (Emory University, USA)

Inverse problems arise in a variety of applications: image processing, machine learning, finance, mathematical biology, and more. Mathematical models for these applications may involve integral equations, partial differential equations, and dynamical systems, and solution schemes are formulated by applying algorithms that incorporate regularization techniques and/or statistical approaches. In most cases these solutions schemes involve the need to solve a large-scale ill-conditioned linear system that is corrupted by noise and other errors. In this talk we describe Krylov subspace-based regularization approaches to solve these linear systems that exploit direct matrix factorization methods on small subproblems to choose regularization parameters and variable preconditioning to enforce certain regularization, such as sparsity and low-rank. The methods are very efficient for large scale inverse problems, they have the advantage that various regularization approaches can be used, and they can also incorporate methods to automatically estimate regularization parameters.

Randomized Trace Estimation and Determinants

Alice Cortinovis (EPFL, Switzerland)

Computing the determinant of a large-scale symmetric positive definite matrix A is a task that arises in many applications, for example in the training of a Gaussian process regression model. When the matrix is sufficiently small, its determinant can be computed via a Cholesky decomposition of A. When A is large and this approach is too costly, one can still get an approximation of the determinant by estimating the trace of a suitable matrix, that is, the matrix logarithm log(A), using randomized algorithms. In this lecture we consider the Hutchinson trace estimator, which obtains an approximation to the trace of a matrix B by averaging some quadratic forms involving B and random vectors following a suitable distribution. In the context of determinants, the advantage of this approach is that quadratic forms involving log(A) can be approximated efficiently using Lanczos method. We discuss convergence bounds for the Hutchinson trace estimator, focusing on the case in which B is a symmetric but indefinite matrix, and apply the bounds to the approximation of the determinant.

Thursday, May 5, 2022

|

8:00am

|

Yousef Saad #3

|

|

9:30am

|

Sara Pollock

|

|

10:45am

|

Agnieszka Międlar

|

|

1:00pm

|

Yousef Saad #4

|

|

2:30pm

|

Jie Chen

|

|

3:45pm

|

Qiang Ye

|

|

5:00pm

|

Excursion @ Crystal Bridges

|

|

6:00pm

|

Banquet @ Crystal Bridges

|

Filtered Anderson Acceleration for Nonlinear PDEs

Sara Pollock (University of Florida, USA)

Anderson acceleration (AA) is a popular extrapolation technique used to accelerate the convergence of fixed-point iterations. It requires the storage of a (usually) small number of solution and update vectors, and the solution of an optimization problem that is generally posed as least-squares and solved efficiently by a thin QR decomposition. First developed in 1965 in the context of integral equations, this method has recently been increasing in popularity as a Jacobian-free approach to converging to discrete nonlinear PDE solutions, not to mention applications in optimization and machine learning. The convergence behavior of AA is still not fully understood, and its dependence on the selection of parameters including the algorithmic depth remains an active field of research. In this talk we will discuss understanding and improving the behavior of the algorithm using standard tools and techniques from numerical linear algebra. We will also numerically demonstrate how the filtering and dynamic depth selection procedures suggested by the recent theory can be used to improve both the efficiency and robustness of accelerated solves.

Challenges for Eigenvalue Computations in Breakthrough Applications

Agnieszka Międlar (University of Kansas, USA)

Many real life problems lead to challenging PDE eigenvalue problems, e.g., vibrations of structures or calculation of energy levels in quantum mechanics. A lot of research is devoted to the so-called Adaptive Finite Element Method (AFEM) which allows discretization of the governing PDE, solving the finite dimensional algebraic eigenvalue problem and iteratively improving obtained numerical approximations. However, advanced approaches dedicated to solve these challenging eigenvalue problems require a unified framework bringing together: spectral and perturbation theory to derive a priori error estimators, a posteriori error analysis which enables deriving efficient and reliable error estimators which take into account various errors of different origins, iterative solvers and model reduction techniques to efficiently solve finite dimensional algebraic linear and nonlinear eigenvalue problems etc. This talk will discuss several attempts to achieve the above goal. In particular, we will discuss how the Cauchy integral-based approaches offer an attractive algorithmic framework when solving interior large-scale linear and nonlinear eigenvalue problems. Finally, we will illustrate behavior of presented methods with several numerical examples.

Deep Learning with Graph-Structured Data: A Mathematical Perspective

Jie Chen (IBM Research, USA)

Graphs are a mathematical abstraction as well as a structured organization of data, ubiquitously used in science and technology. In scientific computing, they model sparse matrices such that their partitioning and coarsening are vital ingredients in the solution of large linear systems; while in machine learning, graphs capture the pairwise relationship of entities and they offer supplementary information for building predictive models. With the resurgence of neural networks for their remarkable effectiveness in modeling the complex mapping between inputs and outputs, how one injects the structural information to enhance the predictive power of neural networks attracted surging interest recently. This lecture is a brief journey of the recent rise of graph neural networks (GNNs). I will introduce GNNs as a mathematical model, illustrate their uses, describe scalability challenges in training and inference, present solutions for massive graphs, and discuss more complex but practical scenarios including temporal evolution of the graphs and the learning of a hidden graph structure. Both the audiences familiarizing themselves with deep learning and those seeking a research subject will find this lecture informative.

Batch Normalization Preconditioning for Neural Network Training

Qiang Ye (University of Kentucky, USA)

Batch normalization (BN) is a ubiquitous method in deep neural network training that has been shown to decrease training time and improve generalization performance. Despite its success, BN is not theoretically well understood. It is not suitable for use with very small mini-batch sizes or online learning. In this talk, we will analyze the effects of mini-batch statistics of a hidden variable on the Hessian matrix of a loss function and propose a preconditioning method called Batch Normalization Preconditioning (BNP) to improve the conditioning of the Hessian. BNP implicitly uses a parameter transformation that is equivalent to normalizing the hidden variables. It reduces the condition number of the Hessian and hence accelerates convergence of training iterations. Compared with BN, one benefit is that BNP is not constrained on the mini-batch size and works in the online learning setting. We will present several experiments demonstrating competitiveness of BNP. Furthermore, we will discuss a connection to BN which provides theoretical insights on how BN improves training and how BN is applied to special architectures such as convolutional neural networks. The talk is based on a joint work with Susanna Lange and Kyle Helfrich.

Friday, May 6, 2022

|

8:00am

|

Yousef Saad #5

|

|

9:30am

|

Rachel Minster

|

|

10:45am

|

Mark Arnold

|

|

1:00pm

|

Women in STEM Panel

|

|

2:15pm

|

Contributed Talks

|

|

3:45pm

|

Closing Remarks

|

Randomized Parallel Algorithms for Tucker Decompositions

Rachel Minster (Wake Forest, USA)

Large-scale data frequently appears in data analysis and numerical computing applications, posing challenges in storage and computational costs. These datasets often possess inherent multidimensional structure that we can exploit to compress and store them in tensor form. The Tucker decomposition is one low-rank tensor decomposition that generalizes the matrix SVD to higher dimensions. Traditional algorithms used to compute Tucker decompositions can be computationally expensive as they involve computing the SVD of matrices with a large number of columns. In this talk, we will discuss randomized algorithms and probabilistic analysis for Tucker decompositions. We will also propose new parallel randomized algorithms that address important computational challenges. Using randomized matrix techniques, we accelerate a distributed-memory implementation of the Tucker decomposition to obtain an efficient algorithm that avoids communication. Specifically, we employ a new sketching method that exploits Kronecker structure to accelerate a key computation. We also present probabilistic analysis of the error resulting from the algorithm, as well as numerical results demonstrating the computational benefits of this approach.

Classical Gram-Schmidt without Reorthogonalization

Mark Arnold (University of Arkansas, USA)

In a wide variety of computing environments, the classical Gram-Schmidt algorithm (CGS) can be a very efficient implementation of the QR factorization of a thin (or square) matrix A. But in finite precision CGS suffers from such loss of orthogonality among the columns of Q as to render it generally useless without reorthogonalization. The modified Gram-Schmidt algorithm (MGS), whose sequential nature slows it in many compute environments, is backward stable for many problems (Ax=b, least squares, GMRES iterations, etc.). Our main result says that if A=QR is computed by CGS, and R is column diagonally dominant, then the loss of orthogonality in Q is about the same as for the MGS factorization. This suggests some new (block) methods, of which we will discuss a few, with some experimental results.

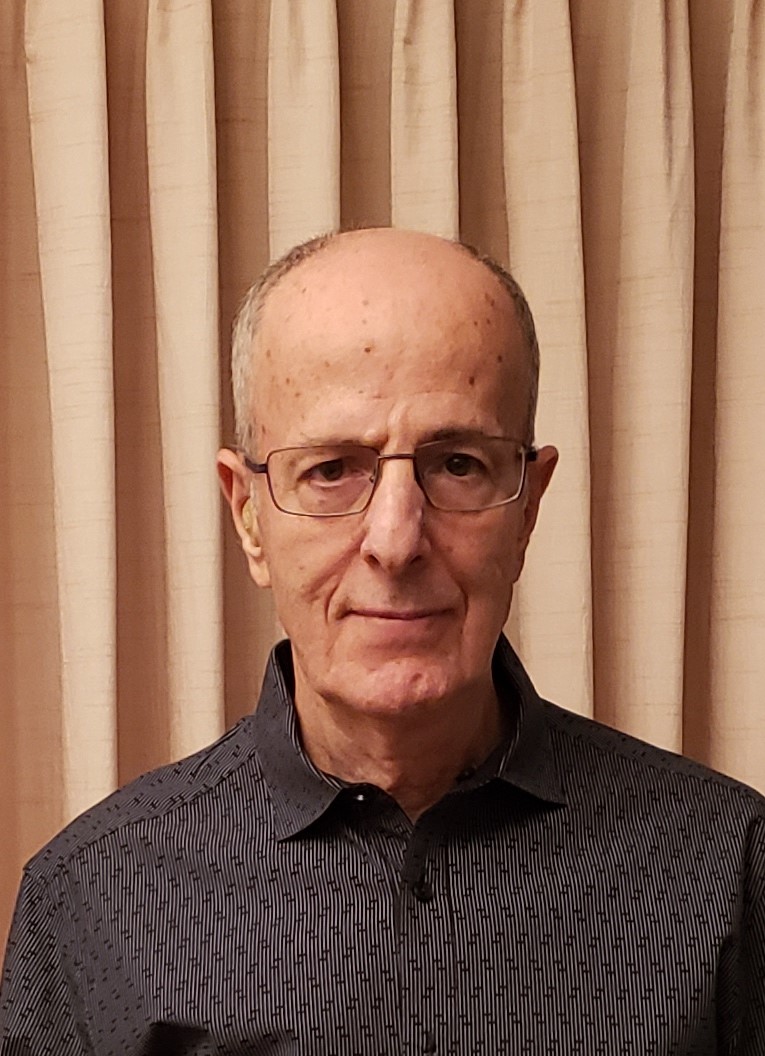

Yousef Saad is a College of Science and Engineering (CSE) distinguished professor with the department

of computer science and engineering at the University of Minnesota. He received the

"Doctorat d’Etat" from the university of Grenoble (France) in 1983. He joined the

university of Minnesota in 1990 as a Professor of computer science and a Fellow of

the Minnesota Supercomputer Institute. He was head of the department of Computer Science

and Engineering from January 1997 to June 2000, and became a CSE distinguished professor

in 2005. From 1981 to 1990, he held positions at the University of California at Berkeley,

Yale, the University of Illinois, and the Research Institute for Advanced Computer

Science (RIACS). His current research interests include: numerical linear algebra,

sparse matrix computations, iterative methods, parallel computing, numerical methods

for electronic structure, and linear algebra methods in data mining. He is the author

of two monographs and over 190 journal articles. He is also the developer or co-developer

of several software packages for solving sparse linear systems of equations and eigenvalue

problems including SPARSKIT, pARMS, ITSOL, and EVSL. Yousef Saad is a SIAM fellow

(class of 2010) and a fellow of the AAAS (2011).

Yousef Saad is a College of Science and Engineering (CSE) distinguished professor with the department

of computer science and engineering at the University of Minnesota. He received the

"Doctorat d’Etat" from the university of Grenoble (France) in 1983. He joined the

university of Minnesota in 1990 as a Professor of computer science and a Fellow of

the Minnesota Supercomputer Institute. He was head of the department of Computer Science

and Engineering from January 1997 to June 2000, and became a CSE distinguished professor

in 2005. From 1981 to 1990, he held positions at the University of California at Berkeley,

Yale, the University of Illinois, and the Research Institute for Advanced Computer

Science (RIACS). His current research interests include: numerical linear algebra,

sparse matrix computations, iterative methods, parallel computing, numerical methods

for electronic structure, and linear algebra methods in data mining. He is the author

of two monographs and over 190 journal articles. He is also the developer or co-developer

of several software packages for solving sparse linear systems of equations and eigenvalue

problems including SPARSKIT, pARMS, ITSOL, and EVSL. Yousef Saad is a SIAM fellow

(class of 2010) and a fellow of the AAAS (2011). Tim Davis is a Professor in the Computer Science and Engineering Department at Texas A&M University.

His primary scholarly contribution is the creation of widely-used sparse matrix algorithms

and software (including x=A\b in MATLAB). Davis is a Fellow of SIAM, ACM, and IEEE.

Tim Davis is a Professor in the Computer Science and Engineering Department at Texas A&M University.

His primary scholarly contribution is the creation of widely-used sparse matrix algorithms

and software (including x=A\b in MATLAB). Davis is a Fellow of SIAM, ACM, and IEEE. Erin Claire Carson is an assistant professor at Charles University, Czech Republic. She obtained her

PhD at the University of California - Berkeley under Demmel and Fox in 2015 on the

topic of theoretical analysis and practical implementation of communication-avoiding

Krylov subspace methods. Before joining Charles University, she was a postdoc at the

Courant Institute at NYU. Her research involves the analysis of matrix computations

and the development of parallel algorithms for large scale settings, with a particular

focus on mixed precision. Her recent work together with Higham on mixed precision

iterative refinement based on Krylov subspace methods forms the basis for the new

HPL-AI benchmark, on which today's top supercomputers already exceed exaflop performance.

Erin currently serves on the PRACE Access Committee, which advises the Board of Directors

concerning the allocation of supercomputing resources. She is involved with the U.S.

Exascale Computing Project as part of the multiprecision effort under the xSDK. Since

January 2022, she has served as a member of the scientific committee of the E-NLA

Seminar and as co-chair of the GAMM Activity Group on Numerical Linear Algebra.

Erin Claire Carson is an assistant professor at Charles University, Czech Republic. She obtained her

PhD at the University of California - Berkeley under Demmel and Fox in 2015 on the

topic of theoretical analysis and practical implementation of communication-avoiding

Krylov subspace methods. Before joining Charles University, she was a postdoc at the

Courant Institute at NYU. Her research involves the analysis of matrix computations

and the development of parallel algorithms for large scale settings, with a particular

focus on mixed precision. Her recent work together with Higham on mixed precision

iterative refinement based on Krylov subspace methods forms the basis for the new

HPL-AI benchmark, on which today's top supercomputers already exceed exaflop performance.

Erin currently serves on the PRACE Access Committee, which advises the Board of Directors

concerning the allocation of supercomputing resources. She is involved with the U.S.

Exascale Computing Project as part of the multiprecision effort under the xSDK. Since

January 2022, she has served as a member of the scientific committee of the E-NLA

Seminar and as co-chair of the GAMM Activity Group on Numerical Linear Algebra. James Nagy is Samuel Candler Dobbs Professor and Chair of the Department of Mathematics at Emory

University, and a Fellow of the Society of Industrial and Applied Mathematics (SIAM).

Dr. Nagy received a PhD in Applied Mathematics in 1991 from North Carolina State University.

Before joining Emory University in 1999 he had postdoctoral research fellowships at

the IMA at the University of Minnesota, with the NSF at the University of Maryland,

and he was on the faculty at Southern Methodist University. Dr. Nagy's research interests

include numerical linear algebra, structured matrix computations, numerical solution

of inverse problems, and image processing. Dr. Nagy's scholarship includes 120+ journal

publications, a book on Image Deblurring with SIAM, and textbook on Scientific Computing

published with Lulu. Dr. Nagy has given more than 175 lectures on his research in

24 countries. Dr. Nagy has been continuously funded from grants from NSF, AFOSR and

NIH. Dr. Nagy has so far directed 14 PhD theses and 16 undergraduate honors thesis

projects. Dr. Nagy has been recognized by numerous teaching awards, including the

Emory Williams Distinguished Teaching Award, the Emory Crystal Apple Award for Excellence

in Graduate Teaching, and the Emory College Professor for Distinguished Teaching.

James Nagy is Samuel Candler Dobbs Professor and Chair of the Department of Mathematics at Emory

University, and a Fellow of the Society of Industrial and Applied Mathematics (SIAM).

Dr. Nagy received a PhD in Applied Mathematics in 1991 from North Carolina State University.

Before joining Emory University in 1999 he had postdoctoral research fellowships at

the IMA at the University of Minnesota, with the NSF at the University of Maryland,

and he was on the faculty at Southern Methodist University. Dr. Nagy's research interests

include numerical linear algebra, structured matrix computations, numerical solution

of inverse problems, and image processing. Dr. Nagy's scholarship includes 120+ journal

publications, a book on Image Deblurring with SIAM, and textbook on Scientific Computing

published with Lulu. Dr. Nagy has given more than 175 lectures on his research in

24 countries. Dr. Nagy has been continuously funded from grants from NSF, AFOSR and

NIH. Dr. Nagy has so far directed 14 PhD theses and 16 undergraduate honors thesis

projects. Dr. Nagy has been recognized by numerous teaching awards, including the

Emory Williams Distinguished Teaching Award, the Emory Crystal Apple Award for Excellence

in Graduate Teaching, and the Emory College Professor for Distinguished Teaching. Alice Cortinovis is a PhD student at École Polytechnique Fédérale de Lausanne (EPFL), in Switzerland.

Her research interests lie in numerical linear algebra. In particular, she has worked

on randomized algorithms for trace estimation, algorithms for low-rank approximation

of matrices, and algorithms for computing functions of matrices with low-rank structure.

She holds a Bachelor and master's degree in mathematics from the University of Pisa

(Italy), where she also received a fellowship from Scuola Normale Superiore.

Alice Cortinovis is a PhD student at École Polytechnique Fédérale de Lausanne (EPFL), in Switzerland.

Her research interests lie in numerical linear algebra. In particular, she has worked

on randomized algorithms for trace estimation, algorithms for low-rank approximation

of matrices, and algorithms for computing functions of matrices with low-rank structure.

She holds a Bachelor and master's degree in mathematics from the University of Pisa

(Italy), where she also received a fellowship from Scuola Normale Superiore. Sara Pollock is an Associate Professor in the Department of Mathematics at the University of Florida.

She obtained her Ph.D. in Mathematics with a specialization in Computational Science

from UC San Diego in 2012, an MS in Applied Mathematics from the University of Washington

in 2008 and a BS in Mathematics from the University of New Mexico in 2007. Her research

is focused on the design and analysis of efficient and accurate numerical methods

for nonlinear and multiscale partial differential equations and for eigenvalue problems.

She has been funded by multiple grants from the NSF including a CAREER award in 2021.

Her work on PDE includes well-posedness and efficient solvers for nonlinear discrete

problems arising from physical and multi-physical systems. Recently her work has included

advances in the understanding and implementation of Anderson acceleration for numerical

PDE problems, and the development of novel extrapolation methods for eigenvalue problems.

Since joining UF in 2018, she founded and co-mentors the UF student chapter of the

Association for Women in Mathematics, and co-advises the UF student chapter of SIAM.

Sara Pollock is an Associate Professor in the Department of Mathematics at the University of Florida.

She obtained her Ph.D. in Mathematics with a specialization in Computational Science

from UC San Diego in 2012, an MS in Applied Mathematics from the University of Washington

in 2008 and a BS in Mathematics from the University of New Mexico in 2007. Her research

is focused on the design and analysis of efficient and accurate numerical methods

for nonlinear and multiscale partial differential equations and for eigenvalue problems.

She has been funded by multiple grants from the NSF including a CAREER award in 2021.

Her work on PDE includes well-posedness and efficient solvers for nonlinear discrete

problems arising from physical and multi-physical systems. Recently her work has included

advances in the understanding and implementation of Anderson acceleration for numerical

PDE problems, and the development of novel extrapolation methods for eigenvalue problems.

Since joining UF in 2018, she founded and co-mentors the UF student chapter of the

Association for Women in Mathematics, and co-advises the UF student chapter of SIAM. Agnieszka Międlar is an Associate Professor of Mathematics at the University of Kansas (KU). She got

a Masters in Computer Science from the Wroclaw University of Technology and PhD in

Mathematics from the Technical University of Berlin. Before joining KU she conducted

her research at the TU Braunschweig, EPF Lausanne and the University of Minnesota.

Her research focuses on numerical linear algebra, numerical analysis for partial differential

equations, and scientific computing, with an emphasis on iterative solvers for large-scale

linear systems and eigenvalue problems, and adaptive finite element methods. She collaborates

with colleagues from other areas of mathematics, natural sciences, and engineering

to develop advanced algorithms to solve complex real-life problems of global importance.

Międlar's most recent work is strongly motivated by emerging challenges in large-scale

(non)linear eigenvalue problems arising in breakthrough applications, e.g., electronic

structure calculations, and in developing resilient numerical linear algebra tools

for distributed and unreliable computing environments, e.g., for edge computing environments.

Her recent work has been supported by the Simons Foundation, the National Science

Foundation (NSF) and the Lawrence Livermore National Laboratory (LLNL).

Agnieszka Międlar is an Associate Professor of Mathematics at the University of Kansas (KU). She got

a Masters in Computer Science from the Wroclaw University of Technology and PhD in

Mathematics from the Technical University of Berlin. Before joining KU she conducted

her research at the TU Braunschweig, EPF Lausanne and the University of Minnesota.

Her research focuses on numerical linear algebra, numerical analysis for partial differential

equations, and scientific computing, with an emphasis on iterative solvers for large-scale

linear systems and eigenvalue problems, and adaptive finite element methods. She collaborates

with colleagues from other areas of mathematics, natural sciences, and engineering

to develop advanced algorithms to solve complex real-life problems of global importance.

Międlar's most recent work is strongly motivated by emerging challenges in large-scale

(non)linear eigenvalue problems arising in breakthrough applications, e.g., electronic

structure calculations, and in developing resilient numerical linear algebra tools

for distributed and unreliable computing environments, e.g., for edge computing environments.

Her recent work has been supported by the Simons Foundation, the National Science

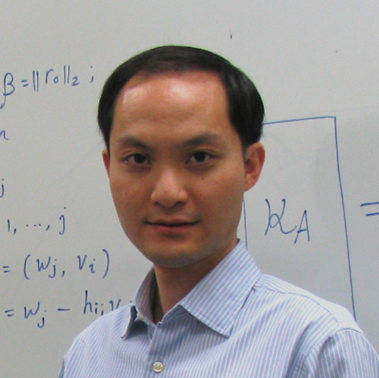

Foundation (NSF) and the Lawrence Livermore National Laboratory (LLNL). Jie Chen is a research staff member and a manager at the MIT-IBM Watson AI Lab, IBM Research.

He received the B.S. degree in mathematics with honors from Zhejiang University and

the Ph.D. degree in computer science from the University of Minnesota. His research

spans a broad spectrum of disciplines, including machine learning, statistics, scientific

computing, and parallel processing, with results published in prestigious journals

and conferences in the respective fields. His interests include graph-based deep learning,

kernel methods, dimension reduction, Gaussian processes, matrix functions, preconditioning,

graph partitioning, and tensor approximations. He was a recipient of SIAM Student

Paper Prize in 2009, a plenary speaker at the 2017 International Conference on Preconditioning

Techniques for Scientific and Industrial Applications, and a recipient of IBM Outstanding

Technical Achievement Award in 2018.

Jie Chen is a research staff member and a manager at the MIT-IBM Watson AI Lab, IBM Research.

He received the B.S. degree in mathematics with honors from Zhejiang University and

the Ph.D. degree in computer science from the University of Minnesota. His research

spans a broad spectrum of disciplines, including machine learning, statistics, scientific

computing, and parallel processing, with results published in prestigious journals

and conferences in the respective fields. His interests include graph-based deep learning,

kernel methods, dimension reduction, Gaussian processes, matrix functions, preconditioning,

graph partitioning, and tensor approximations. He was a recipient of SIAM Student

Paper Prize in 2009, a plenary speaker at the 2017 International Conference on Preconditioning

Techniques for Scientific and Industrial Applications, and a recipient of IBM Outstanding

Technical Achievement Award in 2018. Qiang Ye is a Professor of Mathematics at the University of Kentucky, where he has held the

Edwards Research Professorship and the University Research Professorship. His current

research interests include numerical analysis and machine learning. His research has

been continuously supported by NSF and he has been awarded the Marcel Neuts Prize

for best paper in Stochastic Models.

Qiang Ye is a Professor of Mathematics at the University of Kentucky, where he has held the

Edwards Research Professorship and the University Research Professorship. His current

research interests include numerical analysis and machine learning. His research has

been continuously supported by NSF and he has been awarded the Marcel Neuts Prize

for best paper in Stochastic Models. Rachel Minster is currently a Postdoctoral Fellow in the Department of Computer Science at Wake

Forest under the direction of Grey Ballard. She received her Bachelor of Science in

Mathematics from the University of North Carolina at Charlotte in 2016. She then received

her Masters degree in 2018 and Ph.D in Mathematics in 2021 from North Carolina State

University. Her research interests lie in the field of numerical linear algebra, and

her work is focused on two main topics: tensor decompositions and randomized algorithms.

Specifically, she develops and analyzes randomized algorithms in a variety of applications,

particularly involving low-rank approximation algorithms for tensors, or multiway

arrays.

Rachel Minster is currently a Postdoctoral Fellow in the Department of Computer Science at Wake

Forest under the direction of Grey Ballard. She received her Bachelor of Science in

Mathematics from the University of North Carolina at Charlotte in 2016. She then received

her Masters degree in 2018 and Ph.D in Mathematics in 2021 from North Carolina State

University. Her research interests lie in the field of numerical linear algebra, and

her work is focused on two main topics: tensor decompositions and randomized algorithms.

Specifically, she develops and analyzes randomized algorithms in a variety of applications,

particularly involving low-rank approximation algorithms for tensors, or multiway

arrays. Mark Arnold is an associate professor in the Department of Mathematical Sciences at the University

of Arkansas. He received PhD in Mathematics/Computational Mathematics at Northern

Illinois University in 1993 under Biswa Nath Datta on the topic of algorithms for

pole-placement (a control systems inverse eigenvalue problem). He was a postdoctoral

research associate in the Scalable Computing Laboratory in the USDOE Ames Laboratory

on the Iowa State University campus from 1991-1993, working on computational quantum

physics and parallel processing. He is currently the director of the interdisciplinary

Statistics & Analytics MS program housed in the University of Arkansas Graduate School.

His current research interests include: numerical linear algebra, control systems,

and graph theory.

Mark Arnold is an associate professor in the Department of Mathematical Sciences at the University

of Arkansas. He received PhD in Mathematics/Computational Mathematics at Northern

Illinois University in 1993 under Biswa Nath Datta on the topic of algorithms for

pole-placement (a control systems inverse eigenvalue problem). He was a postdoctoral

research associate in the Scalable Computing Laboratory in the USDOE Ames Laboratory

on the Iowa State University campus from 1991-1993, working on computational quantum

physics and parallel processing. He is currently the director of the interdisciplinary

Statistics & Analytics MS program housed in the University of Arkansas Graduate School.

His current research interests include: numerical linear algebra, control systems,

and graph theory.