Department of Mathematical Sciences

850 West Dickson Street, Room 309

University of Arkansas

Fayetteville, AR 72701

P 479-575-3351

F 479-575-8630

E-mail: math@uark.edu

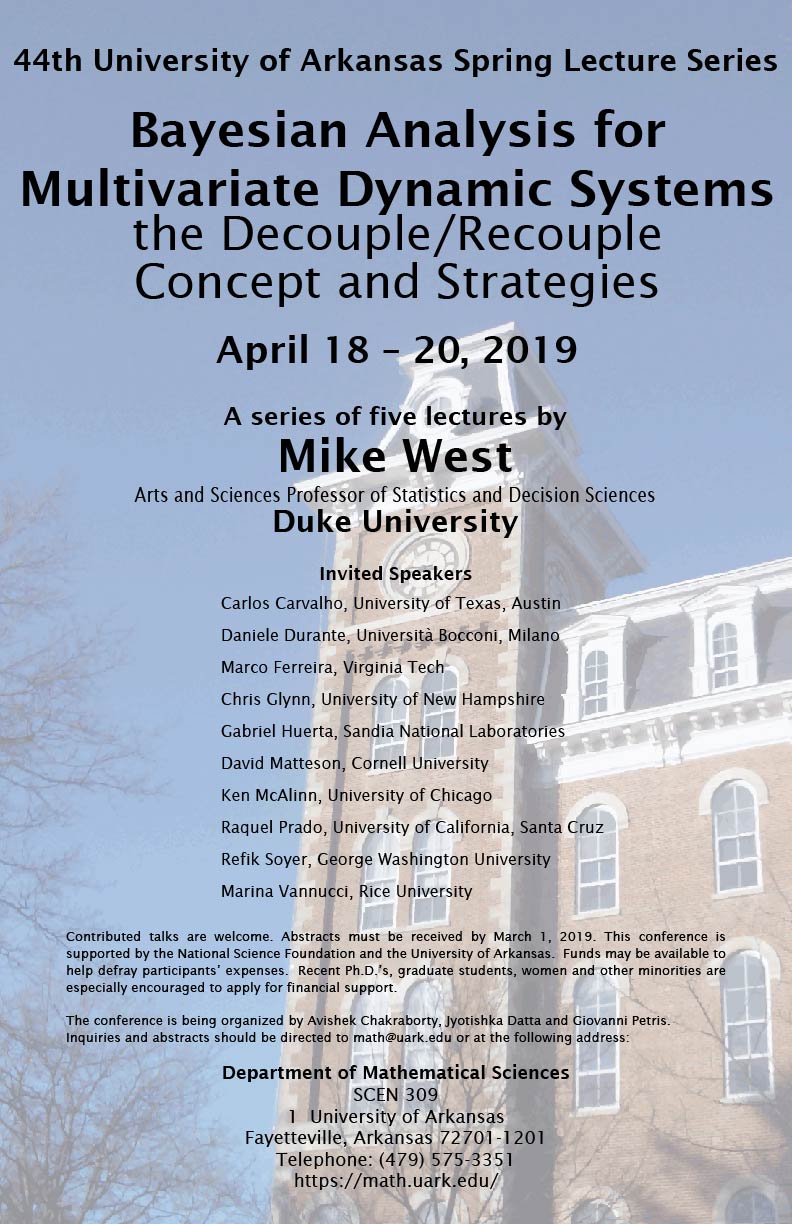

44th Spring Lecture Series

Bayesian Analysis for Multivariate Dynamic Systems: The Decouple/Recouple Concept and Strategies

April 18-20, 2019

Principal Speaker: Mike West (Department of Statistical Science, Duke University)

Invited Speakers

Carlos Carvalho (University of Texas, Austin)

Daniele Durante (Università Bocconi, Milano)

Marco Ferreira (Virginia Tech)

Chris Glynn (University of New Hampshire)

Gabriel Huerta (Sandia National Laboratories)

David Matteson (Cornell University)

Ken McAlinn (University of Chicago)

Raquel Prado (University of California, Santa Cruz)

Refik Soyer (George Washington University)

Marina Vannucci (Rice University)

Organizers

Avishek Chakraborty, Jyotishka Datta, Giovanni Petris

Abstracts & Supplementary Materials

Bayesian Analysis for Multivariate Dynamic Systems: The Decouple/Recouple Concept and Strategies

Mike West (The Arts & Sciences Professor of Statistics & Decision Sciences, Department of Statistical Science, Duke University)

These lectures concern Bayesian statistical approaches to modelling and analysis of multivariate time series with a broad purview over problems of statistical analysis, structure assessment, monitoring, forecasting and decisions in increasingly large-scale dynamic systems. Following a first, introductory lecture on core historical developments in structured multivariate models, the following talks concern more recent, research-level developments in Bayesian modelling of multivariate time series. Each of these modelling areas uses a variant of the decouple/recouple concept for scaling coherent statistical analysis to increasingly high-dimensional and complex systems. Large-scale time series are increasingly common in many areas, promoting the need for refined modelling and computational approaches to enable scalability of statistical analysis. Development and application of the decouple/recouple concept has enabled advances in Bayesian state-space approaches with large-scale data in areas such as financial and commercial forecasting, as well as dynamic network studies. The modelling approaches are generic; resulting methodology is applicable broadly to problems of analysis, monitoring, forecasting, decisions and structure assessment, with potential to aid in quantitative analysis in other applications in socio-economics and the natural and engineering sciences. The essential basis in traditional, flexible, widely-used and reliable approaches to univariate time series analysis based on Bayesian state-space models provides easy access to inherently scalable multivariate extensions based on decouple/recouple strategies. These lectures convey this generality and highlight the approach in a range of applied contexts.

Lecture 1: Multivariate Time Series: Forecasting, Decisions, Structure & Scalability | YouTube Video Link

The introductory lecture includes discussion of the background and motivating applied contexts, applied scope, prior approaches to modelling and dissecting the complexity of the structure of cross-series relationships and multivariate volatility reflecting inter-dependencies in dynamic systems, and the challenges of scaling Bayesian modelling approaches to increasingly high dimensions. I will give an overview of basic ideas of state-space modelling and Bayesian sequential analysis of time series arising from dynamical systems, standard approaches to multivariate stochastic volatility, questions of structured sparsity underlying current state-of-the-art approaches, and computational aspects bearing on scaling to higher dimensions. Applied contexts include policy-oriented macro-economic forecasting, forecasting to feed into dynamic financial portfolio investment decisions, and monitoring and anomaly detection, among other end-point goals. Reading available in Prado and West [2010] chapters 4 and 10, West and Harrison [1989] chapter 16, and selectively in Carvalho and West [2007], West [2010] and West [2013].
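As a minimal sketch of the sequential Bayesian analysis of state-space models mentioned above, the following Python function runs the forward filter for a first-order polynomial (local level) dynamic linear model with a known observation variance and a single discount factor. The choice of model, the function name `local_level_filter`, and all default values are assumptions made for illustration; they are not taken from the lectures.

```python
def local_level_filter(ys, m0=0.0, c0=1.0, v=1.0, delta=0.9):
    """Forward filter for y_t = theta_t + nu_t, theta_t = theta_{t-1} + omega_t.

    Assumptions (illustrative only): nu_t ~ N(0, v) with v known, and the
    evolution variance is set implicitly by a discount factor 0 < delta <= 1.
    Returns one tuple (f, q, m, c) per observation: the one-step forecast
    mean and variance, then the posterior mean and variance of the level.
    """
    m, c = m0, c0
    results = []
    for y in ys:
        r = c / delta          # prior variance of the level after discounting
        f, q = m, r + v        # one-step-ahead forecast mean and variance
        a = r / q              # adaptive coefficient (Kalman gain)
        m = m + a * (y - f)    # posterior mean: correct by the forecast error
        c = r - a * a * q      # posterior variance (equals a * v here)
        results.append((f, q, m, c))
    return results


if __name__ == "__main__":
    for f, q, m, c in local_level_filter([1.2, 0.8, 1.5, 1.1]):
        print(f"forecast {f:.3f} (var {q:.3f}) -> posterior {m:.3f} (var {c:.3f})")
```

Each step uses only the previous posterior summary and the new observation, which is what makes running many such univariate filters in parallel inexpensive.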

Lecture 2: Dynamic Dependency Network Models | YouTube Video Link

In recent years the structured modelling of multivariate time series using decouple/recouple ideas in the context of so-called dynamic dependency network models (DDNMs) has become standard technology, especially in stochastic dynamic models in the financial industry, core areas of macroeconomics and allied areas of business. DDNMs are Bayesian state-space models that characterize sparse patterns of dependence among multiple time series via extensions of traditional subset time-varying vector autoregressions, dynamic regressions and multivariate volatility models. The key features of DDNMs are (i) structural sparsity in representing cross-series linkages, and (ii) scalability to larger numbers of individual time series, with computations that scale linearly in the number of series. The theory of DDNMs shows how the individual series can be decoupled for sequential analysis, and then recoupled for applied forecasting and decision analysis. Decoupling allows fast, efficient analysis of each of the series in individual univariate models that are linked, for later recoupling, through a theoretical multivariate volatility structure defined by a sparse underlying graphical model. Computational advances are especially significant in connection with model uncertainty about the sparsity patterns among series that define these graphical models; Bayesian model averaging using discounting of historical information can build substantially on this computational advance. An extensive, detailed case study showcases the use of these models, and the improvements in forecasting and financial portfolio investment decisions that are achievable. Using a long series of daily international currency, stock indices and commodity prices, the case study includes evaluations of multi-day forecasts and Bayesian portfolio analysis with a variety of practical utility functions, as well as comparisons against commodity trading advisor benchmarks. Reading available in Zhao et al. [2016] (primary) and Nakajima and West [2013, 2017] (secondary).
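One way to write down the decouple/recouple step described in this abstract is sketched below. The notation ($\mu_{jt}$ for the series-specific predictor, $pa(j)$ for the set of contemporaneous parents of series $j$, $\sigma_{jt}^2$ for the univariate variances) is an assumption chosen for illustration and is not quoted from the lecture or the readings.

```latex
% Decoupled: one univariate model per series j = 1, ..., q
y_{jt} = \mu_{jt} + \sum_{i \in pa(j)} \gamma_{jit}\, y_{it} + \nu_{jt},
\qquad \nu_{jt} \sim N(0, \sigma_{jt}^2)

% Recoupled: stack the q equations, with \Gamma_t holding the \gamma_{jit}
% (strictly triangular under the chosen ordering of the series)
(I - \Gamma_t)\, y_t = \mu_t + \nu_t,
\qquad \nu_t \sim N(0, D_t),
\qquad D_t = \mathrm{diag}(\sigma_{1t}^2, \dots, \sigma_{qt}^2)

% Implied multivariate distribution and precision matrix
y_t \sim N\!\left(A_t \mu_t,\; A_t D_t A_t^{\top}\right),
\qquad A_t = (I - \Gamma_t)^{-1},
\qquad \Omega_t = (I - \Gamma_t)^{\top} D_t^{-1} (I - \Gamma_t)
```

Because $\Gamma_t$ is strictly triangular, $I - \Gamma_t$ is always invertible, and small parental sets keep the implied precision matrix $\Omega_t$ sparse.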

Lecture 3: Simultaneous Graphical Dynamic Models | YouTube Video Link

Building on the success and broader application of DDNMs, the recent development of a more general class of simultaneous graphical dynamic linear models (SGDLMs) has substantially advanced the ability to model and analyze many larger-scale systems of time series. A key and critical issue faced by DDNMs is the requirement to define an ordering of the individual, univariate series in a multivariate system. While in some contexts this is both natural and constructive, in that it enables incorporation of context and theory into models, it is more generally regarded as a challenging and potentially limiting requirement. Defining collectives of coupled systems of simultaneous dynamic models in the more recent SGDLM framework obviates these issues and opens up the scope for a major expansion in terms of the application area and, critically, scalability of analysis. Essentially, SGDLMs define an over-arching framework for sensitive and flexible modelling of dynamics and relationships at the level of individual univariate series coupled with similarly flexible structures for representing cross-series/multivariate stochastic and dynamic volatility. As with the subclass of DDNMs, the computational demands of the resulting Bayesian analysis scale linearly in the number of series. This lecture discusses the framework and decouple/recouple methodology of SGDLMs. The scope includes issues of variable selection, Bayesian computation for scalability, and a case study in exploring the resulting potential for improved short-term forecasting of large-scale volatility matrices. The case study concerns financial forecasting and portfolio optimization for decisions with a 400-dimensional series of daily stock prices. The analysis shows that the SGDLM forecasts volatilities and covolatilities well, making it ideally suited to contributing to quantitative investment strategies to improve portfolio returns. The analysis also identifies performance metrics linked to the sequential Bayesian filtering analysis that turn out to define a leading indicator of increased financial market stresses, comparable to but leading standard financial risk measures. Parallel computation using GPU implementations substantially advances the ability to fit and use these models. Reading available in Gruber and West [2016] and Gruber and West [2017].

Lecture 4: Dynamic Count Systems: Network Flow Modelling and Monitoring | YouTube Video Link

Traffic flow count data in networks arise in many applications, such as automobile or aviation transportation, certain directed social network contexts, and Internet studies. With a central example of Internet browser traffic flow through site-segments of an international news website, this lecture highlights advances in modelling of dynamic network flows based on the decouple/recouple concept. Bayesian analyses of two linked classes of models, in tandem, allow fast, scalable and interpretable Bayesian inference. A novel class of flexible but decoupled state-space models for streaming count data is able to adaptively characterize and quantify network dynamics efficiently in real-time. These models are used as emulators of more structured, time-varying gravity models that allow formal dissection of network dynamics. This yields interpretable inferences on traffic flow characteristics, and on dynamics in interactions among network nodes. Bayesian monitoring theory defines a strategy for sequential model assessment and adaptation in cases when network flow data deviate from model-based predictions. Exploratory and sequential monitoring analyses of evolving traffic on a network of website segments in e-commerce demonstrate the utility of this coupled Bayesian emulation approach to the analysis of streaming network count data. For large-scale networks, second-stage development involves customized dynamic generalized linear models (DGLMs) integrated into the network context via the decouple/recouple approach. Development is anchored in an expanded, higher-dimensional case study of flows of visitors to the commercial news website, defining a long time series of node-node counts on over 56,000 node pairs. Characterizing inherent stochasticity in traffic patterns, understanding node-node interactions, adapting to dynamic changes in flows and allowing for sensitive monitoring to flag anomalies are central questions. The methodology of dynamic network DGLMs will be of interest and utility in broad ranges of dynamic network flow studies, and the underlying dynamic models will apply in studies of integer flows in dynamical systems more broadly. Reading available in Chen et al. [2017] and Chen et al. [2018].

Lecture 5: Dynamic Count Systems: Multiscale Models for Large-Scale Forecasting | YouTube Video Link

Problems of forecasting many related time series of counts arise in many areas, such as consumer behaviour in a range of socio-economic contexts, various natural and biological systems, and commercial and economic problems of analysis and forecasting of flows and discrete outcomes. Such data are particularly prevalent in consumer demand and sales contexts. With one motivating applied context of multi-step ahead forecasting of daily sales of many supermarket items across a system of outlets, this lecture discusses new classes of dynamic models for complex series of counts. The models address efficiency, efficacy and scalability of dynamic models based on the concept of decouple/recouple applied to multiple series that are individually represented via novel univariate state-space models for non-negative counts. The latter involve dynamic generalized linear models for binary and conditionally Poisson time series, with dynamic random effects for over-dispersion, allowing the use of dynamic covariates in both binary and non-zero count components. Sequential Bayesian analysis allows fast, parallel analysis of sets of decoupled time series. New multivariate models then enable information sharing in contexts when data at a more highly aggregated level provide more incisive inferences on shared patterns such as trends and seasonality. A novel multiscale approach, a further example of the concept of decouple/recouple in time series, enables information sharing across series. This incorporates cross-series linkages while insulating parallel estimation of univariate models, and hence enables scalability in the number of series. An extension of these models is a class of dynamic count mixture models for forecasting individual customer transactions, coupled with a novel probabilistic model for predicting counts of items per transaction. The resulting transactions-sales models allow the use of dynamic covariates in components at both the transaction and sales levels, and can incorporate a diverse range of trend, seasonal, price, promotion, random effects and other outlet-specific predictors at the level of individual items. Decouple/recouple enabling effective multi-scale analysis is again central. The motivating case study context of many-item, multi-period, multi-step ahead supermarket sales forecasting provides examples that demonstrate improved forecast accuracy in a range of traditional and statistical metrics, while also illustrating the benefits of full probabilistic models for forecast accuracy evaluation and comparison. Reading available in Berry and West [2018] and Berry et al. [2018].
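The combination of a binary component with a conditionally Poisson component for non-zero counts, as described in this abstract, can be illustrated at a single time point. The sketch below assumes that a non-zero count is one plus a Poisson draw (a shifted Poisson); that specific form, the function names, and the parameter names `pi` and `mu` are assumptions for illustration. In the models discussed in the lecture these quantities evolve over time through dynamic generalized linear models, which is not shown here.

```python
import math


def count_mixture_pmf(y, pi, mu):
    """P(Y = y) for a zero/non-zero mixture at one time point.

    Assumed form (illustrative): Y = 0 with probability 1 - pi; otherwise
    Y = 1 + X with X ~ Poisson(mu), so non-zero counts start at 1.
    """
    if y < 0:
        return 0.0
    if y == 0:
        return 1.0 - pi
    k = y - 1  # the Poisson part counts the excess over 1
    return pi * math.exp(-mu) * mu ** k / math.factorial(k)


def count_mixture_mean(pi, mu):
    """Forecast mean implied by the mixture: pi * (1 + mu)."""
    return pi * (1.0 + mu)


if __name__ == "__main__":
    pi, mu = 0.3, 2.0
    for y in range(5):
        print(y, round(count_mixture_pmf(y, pi, mu), 4))
    print("mean:", count_mixture_mean(pi, mu))
```

Separating the probability of any sale from the size of a non-zero sale lets each part carry its own covariates, which matches the abstract's mention of dynamic covariates in both components.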

Downloadable Presentation [PDF]

Video Playlist

Dynamic Shrinkage Processes

David S. Matteson (Cornell University)

Abstract: We propose a novel class of dynamic shrinkage processes for Bayesian time series and regression analysis. Building upon a global-local framework of prior construction, in which continuous scale mixtures of Gaussian distributions are employed for both desirable shrinkage properties and computational tractability, we model dependence among the local scale parameters. The resulting processes inherit the desirable shrinkage behavior of popular global-local priors, such as the horseshoe prior, but provide additional localized adaptivity, which is important for modeling time series data or regression functions with local features. We construct a computationally efficient Gibbs sampling algorithm based on a Polya-Gamma scale mixture representation of the proposed process. Using dynamic shrinkage processes, we develop a Bayesian trend filtering model that produces more accurate estimates and tighter posterior credible intervals than competing methods and apply the model for irregular curve-fitting of minute-by-minute Twitter CPU usage data. In addition, we develop an adaptive time-varying parameter regression model to assess the efficacy of the Fama-French five-factor asset pricing model with momentum added as a sixth factor. Our dynamic analysis of manufacturing and healthcare industry data shows that with the exception of the market risk, no other risk factors are significant except for brief periods. https://arxiv.org/abs/1707.00763.

Objective Bayesian Analysis for Gaussian Hierarchical Models with ICAR spatial random effects

Marco Ferreira (Virginia Tech)

Abstract: Bayesian hierarchical models are commonly used for modeling spatially correlated areal data. However, choosing appropriate prior distributions for the parameters in these models is necessary and sometimes challenging. In particular, an intrinsic conditional autoregressive (CAR) hierarchical component is often used to account for spatial association. Vague proper prior distributions have frequently been used for this type of model, but this requires the careful selection of suitable hyperparameters. We derive several objective priors for the Gaussian hierarchical model with an intrinsic CAR component and discuss their properties. We show that the independence Jeffreys and Jeffreys-rule priors result in improper posterior distributions, while the reference prior results in a proper posterior distribution. We present results from a simulation study that compares frequentist properties of Bayesian procedures that use several competing priors, including the derived reference prior. We demonstrate that using the reference prior results in favorable coverage, interval length, and mean squared error. Finally, we illustrate our methodology with an application to 2012 housing foreclosure rates in the 88 counties of Ohio.

Presentation Download [PDF]

Bayesian predictive synthesis: foundations, theory, and applications

Ken McAlinn (University of Chicago)

Abstract: For nearly five decades, the field of forecast combination has grown exponentially, propelled by its practicality and effectiveness in important real-world problems concerning forecasting, uncertainty, and decisions. Concerned with the philosophical and theoretical underpinnings on which forecast combination methods and strategies are built, McAlinn and West (2018) developed a coherent theoretical framework for combining multiple forecast densities called Bayesian predictive synthesis (BPS). BPS not only generalizes existing forecast pooling and Bayesian model mixing methods but also expands the scope to dynamic contexts, allowing adaptation to time-varying biases, miscalibration, and dependencies among models or forecasters. Foundations of BPS, as well as its extensions to temporal settings, will be discussed, accompanied by new theoretical results and several applications that exemplify the effectiveness and utility of BPS for forecasting, inference, and decision making.

Bayesian Causal Forests

Carlos Carvalho (University of Texas at Austin)

Abstract: This paper develops a semi-parametric Bayesian regression model for estimating heterogeneous treatment effects from observational data. Standard nonlinear regression models, which may work quite well for prediction, can yield badly biased estimates of treatment effects when fit to data with strong confounding. Our Bayesian causal forest model avoids this problem by directly incorporating an estimate of the propensity function in the specification of the response model, implicitly inducing a covariate-dependent prior on the regression function. This new parametrization also allows treatment heterogeneity to be regularized separately from the prognostic effect of control variables, making it possible to informatively “shrink to homogeneity”, in contrast to existing Bayesian non-parametric and semi-parametric approaches.

Bayesian Models for inference on brain networks

Marina Vannucci (Rice University)

Abstract: Functional magnetic resonance imaging (fMRI) techniques, a common tool to measure neuronal activity by detecting blood flow changes, have experienced explosive growth in recent years. Statistical methods play a crucial role in understanding and analyzing fMRI data. Bayesian approaches, in particular, have shown great promise in applications. Fully Bayesian approaches allow flexible modeling of spatial and temporal correlations in the data, as well as the integration of multimodal data. In this talk I will look at models for inference on brain connectivity. I will present a model for the analysis of temporal dynamics of functional networks in task-based fMRI data and a multisubject vector autoregressive (VAR) modeling approach for inference on effective connectivity.

Dealing with nuisance parameters in Bayesian model calibration

Kellin Rumsey & Gabriel Huerta (Sandia National Laboratories)

Abstract: We consider the problem of physical parameter estimation by combining experimental data with computer model simulations. The Bayesian Model Calibration (BMC) framework accommodates a wide variety of uncertainties, including model misspecification or model discrepancy, and is thus a useful tool for solving inverse problems. In the presence of a high-dimensional vector of nuisance parameters, the problem of inferring physical parameters is often poorly identified. We propose various statistical approaches for calibrating physical parameters in such situations. First, we consider regularization, the specification of hierarchical priors on the nuisance parameters, with the goal of preserving measurement uncertainty and avoiding over-fitting. Second, we consider modularization, an alternative to the full Bayesian approach which mimics the forward propagation of uncertainty by sampling the nuisance parameters via an alternative scheme, rather than updating them via full conditional distributions. The efficiency of these methods is illustrated with a comprehensive simulation study that accommodates different scenarios of dimensionality for the nuisance parameters, along with different levels of complexity in inferring the parameters of interest. These methods are also applied to a dynamic material setting to produce statistical inferences on the material properties of tantalum.

Presentation Download [PDF]

Bayesian methods for improved public policy in addressing homelessness

Chris Glynn (University of New Hampshire)

Abstract: Homelessness is a complex social problem with many causes. Public policy to address homelessness is often based on limited data and rigid statistical models that do not adequately quantify uncertainty or allow geographic variation in underlying factors. As a result, one-size-fits-all policies are typically adopted. In this talk, I present a Bayesian dynamic modeling framework for homeless count data that addresses some of the data quality challenges. We develop a Dirichlet process mixture model that allows clusters of communities with similar features to exhibit common patterns of variation in homeless rates. A main finding of the research is that the expected homeless rate in a community increases sharply once median rental costs exceed one third of median income, providing empirical evidence for the widely used definition of a housing cost burden at 30%.

Efficient PARCOR approaches for dynamic modeling of multivariate time series

Raquel Prado (University of California, Santa Cruz)

Abstract: The partial correlation function (PARCOR) provides a way to characterize time series processes. We present a Bayesian PARCOR modeling approach for fast and accurate inference in multivariate non-stationary time series settings. Our formulation is based on modeling the forward and backward partial autocorrelation coefficients of a multivariate time series process using multivariate dynamic linear models. Computationally expensive Markov chain Monte Carlo schemes for posterior inference are avoided by obtaining approximate inference of the observational variance-covariance matrices and the multivariate time-varying PARCOR coefficients. Approximate posterior inference on the implied time-varying vector autoregressive (TV-VAR) coefficients can also be obtained using Whittle’s algorithm. Similarly, approximate inference on any other functions of these parameters such as the multivariate time-frequency spectra, coherence, and partial coherence can also be obtained. A key aspect of the dynamic multivariate PARCOR approach is that it requires multivariate DLM representations with state-space matrices of lower dimension than those required in commonly used multivariate non-stationary models such as TV-VAR models. Therefore, the multivariate PARCOR approach offers advantages over such frameworks, including computational feasibility for joint analysis of relatively large-dimensional multivariate time series, and interpretable time-frequency analysis in the multivariate context. The performance of the proposed dynamic multivariate Bayesian PARCOR models is illustrated in extensive simulation studies and in the analysis of multivariate non-stationary time series data arising from neuroscience and environmental applications.
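The link between PARCOR coefficients and autoregressive coefficients can be illustrated in the simplest setting. The sketch below uses the Durbin-Levinson recursion for a univariate, stationary AR process; this is a swapped-in univariate analogue, not the multivariate, time-varying Whittle algorithm named in the abstract, and the function name `parcor_to_ar` is hypothetical.

```python
def parcor_to_ar(parcor):
    """Map partial autocorrelations at lags 1..p to AR(p) coefficients.

    Univariate Durbin-Levinson recursion (illustrative stand-in for the
    multivariate case): with k the lag-m partial autocorrelation,
        phi[m][j] = phi[m-1][j] - k * phi[m-1][m-j]   for j = 1..m-1
        phi[m][m] = k
    """
    phi = []
    for k in parcor:
        # Update the existing coefficients, then append the new last one.
        phi = [a - k * b for a, b in zip(phi, reversed(phi))] + [k]
    return phi


if __name__ == "__main__":
    print(parcor_to_ar([0.5, 0.2]))
```

Working with partial autocorrelations one lag at a time is what allows each stage to be modelled separately, in the spirit of the lower-dimensional state-space representations described in the abstract.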

Exact Kalman Filter for Binary Time Series

Daniele Durante (Bocconi University)

Abstract: Non-Gaussian state-space models arise routinely in several applications. Within this framework, the binary time series setting provides a source of constant interest due to its relevance in a variety of studies. However, unlike Gaussian state-space models, where filtering and predictive distributions are available in closed form and can be updated sequentially via tractable expressions, binary state-space models require either approximations or sub-optimal sequential Monte Carlo strategies for inference and prediction. This is due to the apparent absence of conjugacy between the Gaussian states and the probit or logistic likelihood induced by the observation equation for the binary data. In this talk, I will prove that when the focus is on dynamic probit models with Gaussian state variables, filtering and predictive distributions belong to the class of unified skew-normal variables and the associated parameters can be updated online via closed-form expressions. This result leads to the first exact Kalman filter for univariate and multivariate binary time series, which also provides methods to draw independent and identically distributed samples from the exact filtering and predictive distributions of the states, thereby improving Monte Carlo inference. A scalable and optimal particle filter which exploits the unified skew-normal properties is also developed, and additional exact expressions for the smoothing distribution are provided. As outlined in an illustrative application, the proposed methods improve on state-of-the-art strategies available in the literature.

Bayesian Modeling Strategies for Multivariate non-Gaussian Time Series

Refik Soyer (The George Washington University)

Abstract: Multivariate non-Gaussian time series of correlated observations are considered. In so doing, we focus on time series from multivariate counts and durations. Dependence among series arises as a result of sharing a common dynamic environment. We discuss characteristics of the resulting multivariate time series models and develop Bayesian inference for them using particle filtering and Markov chain Monte Carlo methods.

Presentation Download [PDF]

References

- L. Berry and M. West. Bayesian forecasting of many count-valued time series. Submitted, 2018. arXiv:1805.05232.

- L. Berry, P. Helman, and M. West. Probabilistic forecasting of heterogeneous consumer transaction-sales time series. Technical Report, forthcoming, 2018. arXiv:18xx.xxxxx.

- C. M. Carvalho and M. West. Dynamic matrix-variate graphical models. Bayesian Analysis, 2:69–98, 2007. URL https://projecteuclid.org/euclid.ba/1340390064.

- X. Chen, K., D. Banks, R. Haslinger, J. Thomas, and M. West. Scalable Bayesian modeling, monitoring and analysis of dynamic network flow data. Journal of the American Statistical Association, published online July 10, 2017. doi: 10.1080/01621459.2017.1345742. URL http://www.tandfonline.com/doi/full/10.1080/01621459.2017.1345742. arXiv:1607.02655

- X. Chen, D. Banks, and M. West. Bayesian dynamic modeling and monitoring of network flows. Submitted, 2018. arXiv:1805.04667.

- L. F. Gruber and M. West. GPU-accelerated Bayesian learning in simultaneous graphical dynamic linear models. Bayesian Analysis, 11:125–149, 2016. doi: 10.1214/15-BA946. URL http://projecteuclid.org/euclid.ba/1425304898.

- L. F. Gruber and M. West. Bayesian forecasting and scalable multivariate volatility analysis using simultaneous graphical dynamic linear models. Econometrics and Statistics, 3:3–22, 2017. doi: 10.1016/j.ecosta.2017.03.003. URL http://www.sciencedirect.com/science/article/pii/S2452306217300163. arXiv:1606.08291.

- J. Nakajima and M. West. Bayesian analysis of latent threshold dynamic models. Journal of Business & Economic Statistics, 31:151–164, 2013. doi: 10.1080/07350015.2012.747847. URL http://www.stat.duke.edu/~mw/MWextrapubs/NakajimaWest2013JBES.pdf.

- J. Nakajima and M. West. Dynamics and sparsity in latent threshold factor models: A study in multivariate EEG signal processing. Brazilian Journal of Probability and Statistics, 31:701–731, 2017. doi: 10.1214/17-BJPS364. URL https://projecteuclid.org/euclid.bjps/1513328764. arXiv:1606.08292.

- R. Prado and M. West. Time Series: Modelling, Computation & Inference. Chapman & Hall/CRC Press, 2010. URL http://www.stat.duke.edu/~mw/Prado&WestBook/.

- M. West. Bayesian forecasting. In N. Balakrishnan, editor, Methods and Applications of Statistics in Business, Finance and Management Science, pages 72–84. Wiley, Hoboken NJ, 2010. URL http://www.stat.duke.edu/~mw/MWextrapubs/West2010.pdf.

- M. West. Bayesian dynamic modelling. In P. Damien, P. Dellaportas, N. G. Polson, and D. A. Stephens, editors, Bayesian Theory and Applications, pages 145–166. Clarendon: Oxford University Press, 2013. URL http://www.stat.duke.edu/~mw/MWextrapubs/WestAFMSbook2012.pdf.

- M. West and P. J. Harrison. Bayesian Forecasting & Dynamic Models. Springer, 1st edition, 1989. URL http://www.stat.duke.edu/~mw/West&HarrisonBook/.

- Z. Y. Zhao, M. Xie, and M. West. Dynamic dependence networks: Financial time series forecasting & portfolio decisions (with discussion). Applied Stochastic Models in Business and Industry, 32:311–339, 2016. doi: 10.1002/asmb.2161.

Shared Posters

To protect student and faculty research, these posters are available as JPG image files. If you need a PDF copy for accessibility-related reasons, please contact the poster presenters or math@uark.edu.

BayesHMM: Full Bayesian Inference for Hidden Markov Models

Luis Damiano (Statistics, Iowa State University), Michael Weylandt (Statistics, Rice University), Brian Peterson (Computational Finance and Risk Management, University of Washington)

Poster Download JPG

Hybrid Generalized Geographically Weighted Regression: Predicting Undergraduate Student Success

James Roddy (University of Arkansas, Fayetteville), Samantha Robinson (University of Arkansas, Fayetteville)

Poster Download JPG

Rice Panicle Segmentation from UAV Images Using Multivariate Gaussian Mixture Model

Md Abul Hayat (Electrical Engineering, University of Arkansas, Fayetteville), Jingxian Wu (Electrical Engineering, University of Arkansas, Fayetteville), Yingli Cao (Information and Electrical Engineering, Shenyang Agricultural University, Shenyang, China)